Introduction

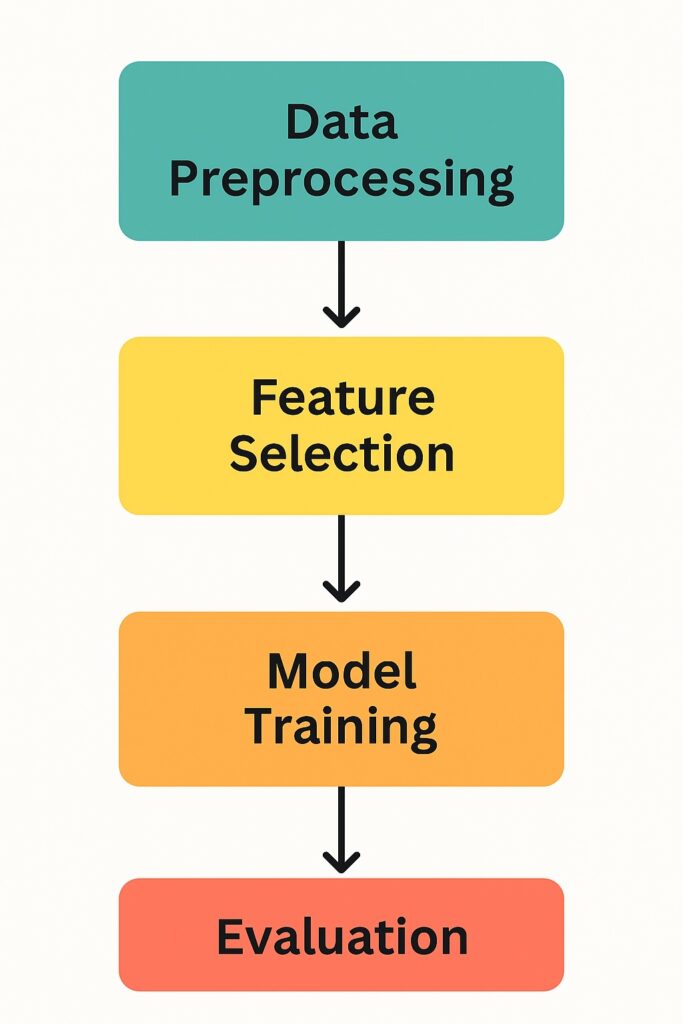

Feature selection is a critical stage of the machine learning pipeline, with the sklearn library a popular choice. Often, real-world data has many variables, where some are highly relevant but others are not. Worse than that, they are often distracting and introduce noise, hampering ML model training. Feature selection is the process of filtering only the relevant values for both ML model training and inference. It contributes to reducing noise and complexity by removing redundant or irrelevant features from the dataset. Therefore, it improves model performance, interpretability, and training efficiency.

There are further benefits with feature selection, especially when using sklearn. By reducing the number of input features, we reduce the risk of overfitting. This allows the model to better generalize with unseen data. Also, when selected features are used for training, then models converge quicker and require less computational power. Another important benefit is that feature selection eliminates irrelevant signals that confuse the learning algorithm.

The sklearn library provides classes that support feature selection, including SelectKBest, RFE, and SelectFromModel. These tools also integrate seamlessly with pipelines that allow for efficient experimentation and tuning. These libraries enable the application of filter, wrapper, or embedded methods with only a few lines of code.

1. What Is Feature Selection?

We have briefly considered feature selection with sklearn, but now want to properly define feature selection. Feature selection is the process that identifies which input variables contribute most to predicting the target outcome. It simplifies the ML model by focusing only on the features that contribute meaningfully to the predicted value. Its goal is to enhance learning efficiency by eliminating data that contributes little or no insight to the model. By eliminating irrelevant features, feature selection helps reduce noise and overfitting of data.

Another data preprocessing activity is dimensionality reduction, which creates new features by combining existing ones. This is different from feature selection, which maintains a subset of the original features. Feature selection also preserves the original variables and maintains interpretability. This is in contrast to dimensional reduction, which transforms these variables. Another key difference is that dimensional reduction techniques like PCA project data into a lower-dimensional space. In contrast, feature selection simply filters out less useful features, especially using sklearn.

2. Why Feature Selection Matters in ML

An essential benefit of feature selection with sklearn is that it reduces model complexity. A central tenet of ML engineering is to look for every opportunity to reduce model complexity. Models that are simpler will have fewer parameters to estimate and are easier to interpret, debug, and maintain. They also help prevent overfitting, especially when working with small or noisy datasets.

Furthermore, when we remove irrelevant or redundant features, our models will focus on the most informative signals. This, in turn, will boost predictive accuracy. Also, we will reduce training time and memory usage, making our algorithms operate more efficiently.

Also, when we have fewer features, we can better determine which inputs are driving model predictions. Having this clarity will support better decision-making, which is especially crucial in regulated domains like finance or healthcare. In these domains, explainability is crucial.

High-dimensional datasets are another critical concern, as they often lead to overfitting. In such cases, feature selection with sklearn helps by reducing input size and mitigating overfitting risk.

For a broader look at how managed services like Amazon SageMaker handle scalable model training and deployment, see our guide on SageMaker for ML Engineers.

3. Feature Selection Methods in Sklearn

Sklearn contains several categories of feature selection methods, including filter methods, wrapper methods, and embedded methods.

a. Filter Methods – SelectKBest

These methods rank features in terms of relevancy to select the best features to keep for training the model. They rank features by applying a statistical test to each feature independently of the model. Next, they select the top k features based on the highest scores returned by the chosen test function.

When selecting features for classification models, tests like chi2 (chi-squared) and f_classif (ANOVA F-value) are often used. chi2 is most suitable for non-negative values and measures the dependence between categorical features and the target. f_classif uses variant analysis to evaluate linear relationships between numeric features and class labels. An example using these classification functions is provided below:

from sklearn.datasets import load_iris from sklearn.feature_selection import SelectKBest, chi2, f_classif import pandas as pd # Load dataset X, y = load_iris(return_X_y=True) # Create DataFrame for readability feature_names = load_iris().feature_names X_df = pd.DataFrame(X, columns=feature_names) # Apply SelectKBest with chi2 selector_chi2 = SelectKBest(score_func=chi2, k=2) X_new_chi2 = selector_chi2.fit_transform(X_df, y) # Apply SelectKBest with f_classif selector_fclass = SelectKBest(score_func=f_classif, k=2) X_new_fclass = selector_fclass.fit_transform(X_df, y) # Display top features selected by each method print("Top 2 features using chi2:") print(X_df.columns[selector_chi2.get_support()].tolist()) print("\nTop 2 features using f_classif:") print(X_df.columns[selector_fclass.get_support()].tolist())

Sklearn’s SelectKBest methods for feature selection also support regression models. SelectKBest measures the strength of linear relationships between features and continuous targets. It measures this strength through statistical tests, and the most commonly used feature is f_regression. f_regression computes the F-value between each feature and the target variable. This explains the variance in the target using univariate linear regression. f_regression is best used when you’re confident the relationship is linear and the dataset is clean. This function is fast and lightweight.

Another function for regression models is mutual_info_regression, which estimates mutual information. This is achieved through capturing any type of relationship between features and the target that is either linear or non-linear. Use this instead of f_regression when relationships are either complex, non-linear, or not easily determined. It is slower than f_regression, especially on large datasets.

An example using these regression functions is provided below:

from sklearn.datasets import load_diabetes from sklearn.feature_selection import SelectKBest, f_regression, mutual_info_regression import pandas as pd # Load dataset X, y = load_diabetes(return_X_y=True) # Create DataFrame for readability feature_names = load_diabetes().feature_names X_df = pd.DataFrame(X, columns=feature_names) # Apply SelectKBest with f_regression selector_f = SelectKBest(score_func=f_regression, k=5) X_new_f = selector_f.fit_transform(X_df, y) # Apply SelectKBest with mutual_info_regression selector_mi = SelectKBest(score_func=mutual_info_regression, k=5) X_new_mi = selector_mi.fit_transform(X_df, y) # Display top features selected by each method print("Top 5 features using f_regression:") print(X_df.columns[selector_f.get_support()].tolist()) print("\nTop 5 features using mutual_info_regression:") print(X_df.columns[selector_mi.get_support()].tolist())

b. Wrapper Methods – Recursive Feature Elimination (RFE)

Another approach to feature selection with sklearn is assessing different combinations of features. This is achieved by actually training and testing a model on these combinations. The name given to this class of feature selection is wrapper methods. RFE is a wrapper method that recursively assesses a model and removes the least important feature at each iteration. It trains the model multiple times, each time with a smaller subset of features to evaluate their impact on performance. RFE continues iterating until the desired number of features is reached.

RFE can wrap around LogisticRegression or RandomForestClassifier by using their built-in .coef_ or .feature_importances_ attributes. These rank and recursively eliminate less relevant features. Pass the estimator to RFE, specify the number of features to select, and fit it to your data. It will then iterate through until it reaches your desired feature size.

Below are the balanced pros and cons of using RFE:

| ✅ Pros | ⚠️ Cons |

|---|---|

| Evaluates features in the context of the model, capturing interactions that filter methods miss. | Requires multiple model training cycles, which can be time-consuming on large datasets. |

| Often improves model accuracy by selecting only the most relevant features. | Results may vary depending on the base model’s sensitivity to data and randomness. |

| Compatible with many estimators such as LogisticRegression and RandomForestClassifier. | Not well-suited for high-dimensional data unless computational resources are sufficient. |

c. Embedded Methods – SelectFromModel

Sklearn can also support feature selection through training the model with the entire feature set. It provides the SelectFromModel methods that examine the trained model weights to determine the most relevant features. For linear models, .coef_ attribute quantifies a feature’s relevance. In the case of tree-based models, the .feature_importances_ attribute quantifies the relevance of each feature. Features are selected whenever these values are above a certain threshold. Setting this threshold is either done manually or the scikit-learn chooses a default. This default is usually the mean or median of important scores.

SelectFromModel then only keeps the features whose relevance exceeds the threshold, allowing dimensionality reduction while preserving predictive power. It works seamlessly with many estimators. Linear estimators include Lasso, Ridge, and LogisticRegression, where .coef_ signifies feature relevance. Tree-based estimators include RandomForestClassifier, GradientBoostingRegressor, where .feature_importances_ signifies feature relevance.

After selecting the most relevant features, you should retrain your final model on the reduced dataset. This ensures that it learns from the optimized input space.

Below is an example of using SelectFromModel with RandomForestClassifier:

from sklearn.ensemble import RandomForestClassifier from sklearn.feature_selection import SelectFromModel from sklearn.datasets import load_iris import pandas as pd # Load dataset X, y = load_iris(return_X_y=True) feature_names = load_iris().feature_names X_df = pd.DataFrame(X, columns=feature_names) # Fit a RandomForest model model = RandomForestClassifier(n_estimators=100, random_state=42) model.fit(X, y) # Use SelectFromModel to reduce features selector = SelectFromModel(estimator=model, threshold='mean', prefit=True) X_reduced = selector.transform(X_df) # Show selected features selected_features = X_df.columns[selector.get_support()] print("Selected features based on feature_importances_ ≥ mean:") print(selected_features.tolist())

4. Code Walkthrough: Comparing Sklearn Feature Selection Methods (300–350 words)

Each set of Sklearn feature selection methods has various pros and cons associated with it. They are also applicable to different use cases and scenarios. Here we compare several side by side to derive further insights into their strengths and weaknesses, and applicability.

Iris Dataset

To compare Skleran feature selection methods, we will use the Iris Dataset that only has 150 samples. It does not have missing values, and it is ideal for our purposes without any data cleaning. The Iris dataset comprises interpretable features that are four well-known botanical measurements that are easy to visualize and understand. Since it is not a binary-only dataset but supports multiclass classification then it is highly useful for evaluating Sklearn methods. Each has 50 samples, making it a balanced and well-structured problem suitable for evaluating ML methods. The scikit-learn framework includes this dataset, making it ideal for learning, testing, and benchmarking ML techniques like Sklearn feature selection.

Feature Selection Step-by-Step

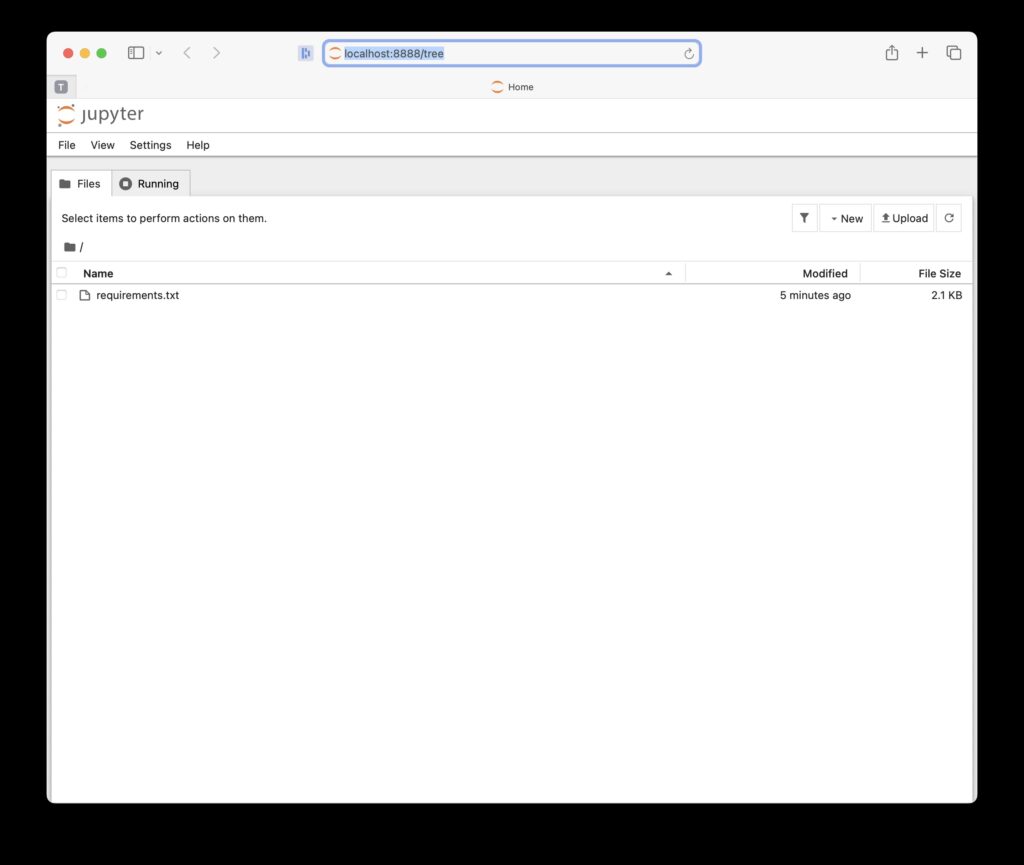

Now that we have a suitable dataset for evaluating different sklearn feature selection methods, we will evaluate these methods. These evaluations were done on a MacBook Pro with M4 Pro Max, 48 GB of RAM, and 1TB SSD.

Starting Point

We set up a Python virtual environment for easy cleanup when done and a safe space to experiment. We also have all our packages isolated, which is essential when doing ML projects on a MacBook.

Once we have set up a virtual environment, we upgrade our pip and install the following libraries:

- numpy

- pandas

- scikit-learn

- matplotlib

- jupyter

Jupyter Notebook

Next, we run the Jupyter notebook to interactively evaluate sklearn methods by typing:

jupyter notebook

This launches a browser with the local Jupyter notebook as the web page.

We create a new notebook using Python 3 (ipykernel)

Load the Iris dataset using the code below:

from sklearn.datasets import load_iris

import pandas as pd

data = load_iris()

X = pd.DataFrame(data.data, columns=data.feature_names)

y = data.target

X.head()

Comparing Methods

We will now compare the following methods side by side:

- SelectKBest (Filter)

- RFE (Wrapper)

- SelectFromModel (Embedded)

Therefore, we tested the above methods interactively, one at a time.

SelectKBest

We ran the following code in a new notebook cell

from sklearn.feature_selection import SelectKBest, f_classif

# Apply SelectKBest with ANOVA F-value

skb_selector = SelectKBest(score_func=f_classif, k=2)

X_skb = skb_selector.fit_transform(X, y)

# Display selected feature names

selected_skb = X.columns[skb_selector.get_support()]

print("Selected features using SelectKBest (f_classif):")

print(selected_skb.tolist())

And it selected the following features:

print("Selected features using SelectKBest (f_classif):")

print(['petal length (cm)', 'petal width (cm)'])

RFE (Wrapper) using Logistic Regression

We then ran this code in Notebook:

from sklearn.feature_selection import RFE

from sklearn.linear_model import LogisticRegression

# Initialize model

model = LogisticRegression(max_iter=1000)

# Apply RFE

rfe_selector = RFE(estimator=model, n_features_to_select=2)

rfe_selector.fit(X, y)

# Display selected feature names

selected_rfe = X.columns[rfe_selector.get_support()]

print("Selected features using RFE with LogisticRegression:")

print(selected_rfe.tolist())

Once this was run, it selected the following features :

Selected features using RFE with LogisticRegression: ['petal length (cm)', 'petal width (cm)']

SelectFromModel (Embedded) using RandomForestClassifier

We then ran this code in Notebook:

from sklearn.ensemble import RandomForestClassifier

from sklearn.feature_selection import SelectFromModel

# Fit a RandomForest model

rf_model = RandomForestClassifier(n_estimators=100, random_state=42)

rf_model.fit(X, y)

# Select important features based on feature_importances_

sfm_selector = SelectFromModel(estimator=rf_model, threshold="mean", prefit=True)

X_sfm = sfm_selector.transform(X)

# Display selected feature names

selected_sfm = X.columns[sfm_selector.get_support()]

print("Selected features using SelectFromModel (RandomForest):")

print(selected_sfm.tolist())

Once this was run, it selected the following features :

Selected features using SelectFromModel (RandomForest):

['petal length (cm)', 'petal width (cm)']

Comparison of Methods

We summarize the results above in the table below, comparing each method based on the results of testing them in our Notebook.

| Feature Selection Method | Top Features Selected |

|---|---|

| SelectKBest (f_classif) | petal length (cm) petal width (cm) |

| RFE (LogisticRegression) | petal length (cm) petal width (cm) |

| SelectFromModel (RandomForest) | petal length (cm) petal width (cm) |

Consequently, we observed that all three methods selected the same two features:

- petal length

- petal width

From above, the key observation was that model accuracy remained high even after reducing input features. Also, feature selection leads to faster model training with lower memory use; therefore, we have improved training efficiency without sacrificing performance. While we had a simple model, we can see the benefit of feature selection.

6. Common Pitfalls and Best Practices

A common and serious mistake when using sklearn for feature selection is when it is done before splitting the data. Training data must be separated from validation data when training models, and that includes feature selection. This is especially true for wrapper and embedded methods that use model training for feature selection. Including validation in this step will cause it to be indirectly learned by the model. This will lead to issues of overfitting and incorrect validation results. In summary, feature selection must be performed on the training set only, and its effectiveness evaluated on the validation data, just like model training.

We have already explored feature selection in this article. However, by no means do we want you to go away thinking that this is the sole preprocessing step. There are other critical preprocessing steps you should also include as part of your ML workflow.

A common trap when using Sklearn model-based feature selection methods is failing to normalize features. Unnormalized features can dominate others for models dependent upon feature magnitude. This can lead to the model assigning disproportionate importance and reducing overall accuracy. This issue also affects feature selection, where unnormalized features can mislead model-based selectors that rely on magnitude. This is especially true for those using coefficients.

7. Final Thoughts and Next Steps

Selecting features through sklearn reduces irrelevant features or features that have minimal impact on the insights from the data. Other features introduce noise that reduces ML model performance. Another benefit is a smaller dataset used for training and inference, improving model performance and reducing resource usage. This includes memory and CPU cycles.

An important part of learning about feature selection is to experiment with your own datasets and observe the benefits. This also provides experience with tweaking and adjusting, which is a crucial skill with ML development. We have provided a simple example here, and a sufficiently powered laptop will allow hands-on learning. References are added at the end along with further reading to explore this topic.

8. Further Reading

If you’re ready to go beyond the basics and build deeper intuition for feature selection and data preprocessing, the following books are excellent starting points. These links are affiliate links, which means we may earn a small commission if you purchase through them — at no extra cost to you. Thank you for your support!

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow (3rd Edition)

A comprehensive, hands-on guide for modern ML workflows. Covers feature selection, model tuning, and deep learning with practical examples.

Python Feature Engineering Cookbook

A focused guide on building powerful features to improve model performance — packed with actionable recipes.

Applied Machine Learning and High-Performance Computing on AWS

Ideal for readers working with big data and cloud infrastructure. Learn how to scale ML pipelines and optimize processing.

Disclosure: As an Amazon Associate, AI Cloud Data Pulse earns from qualifying purchases.

9. References

- Scikit-Learn Documentation – Feature Selection. https://scikit-learn.org/stable/modules/feature_selection.html

- Scikit-Learn Feature Selection API Reference. https://scikit-learn.org/stable/modules/classes.html#module-sklearn.feature_selection

- Pandas Documentation. https://pandas.pydata.org/docs/

- NumPy Documentation. https://numpy.org/doc/

- Jupyter Documentation https://jupyter.org/documentation