Introduction

Our earlier articles demonstrated that TensorFlow is one of the best frameworks available for deep learning. It helps developers build neural networks that learn complex patterns in data, and its Keras API makes model building simple for beginners. Architectures such as convolutional and recurrent neural networks extend what these models can do, and careful training improves their accuracy and performance. This article is a practical guide to building TensorFlow models for beginners, covering the fundamentals of building, training, and evaluating them.

Section 1: Introduction to Building and Training TensorFlow Models

1. Understanding Neural Networks

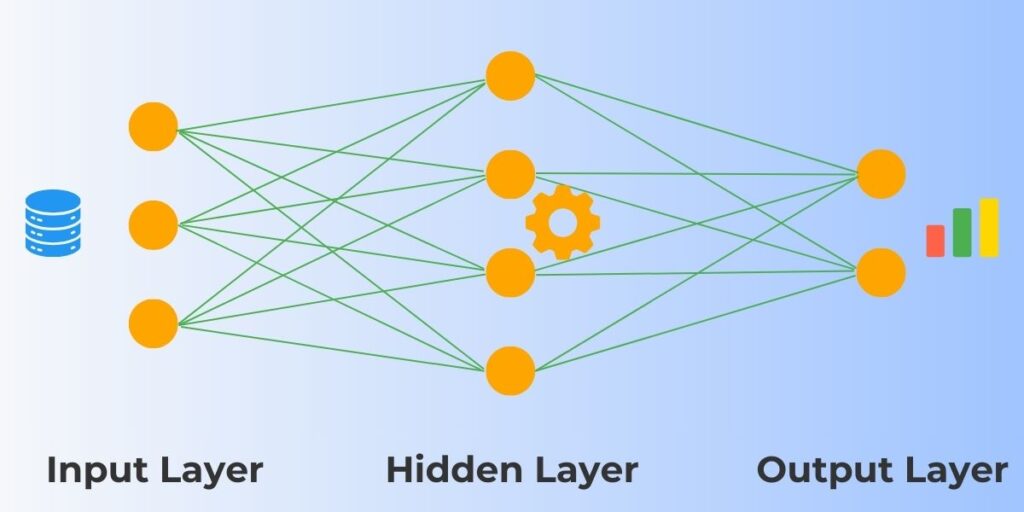

Neural networks have become an important artificial intelligence technique because they can extract complex patterns from large datasets. They consist of layers of interconnected neurons, loosely inspired by biological nervous systems, which makes them well suited to pattern recognition.

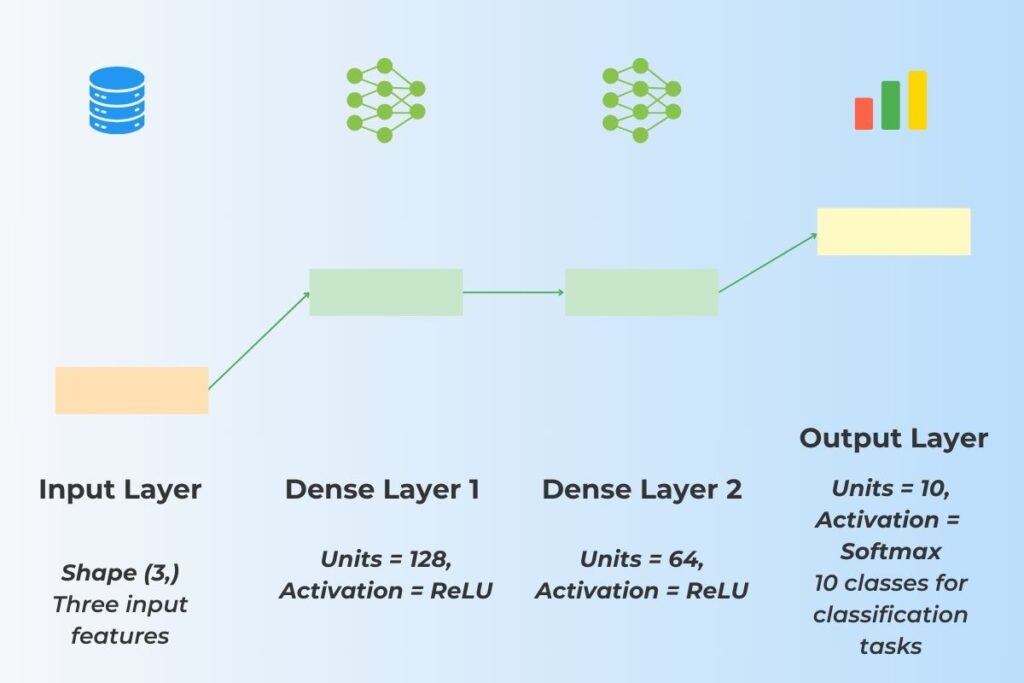

To better understand this concept, here is a simple illustration of a neural network, showing the input layer, hidden layers, and output layer along with their connections.

This structure gives neural networks a significant advantage in capturing data features for accurate learning. Organizations can use them in diverse applications, including image processing and text analysis.

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

# Define a simple neural network with the Sequential model.
model = Sequential([
    # First Dense layer: accepts 3 input features and has 3 neurons.
    Dense(units=3, activation='relu', input_shape=(3,)),
    # Hidden layer with 4 neurons.
    Dense(units=4, activation='relu'),
    # Output layer with 1 neuron (sigmoid output for binary classification).
    Dense(units=1, activation='sigmoid')
])

# Display the model's architecture.
model.summary()
```

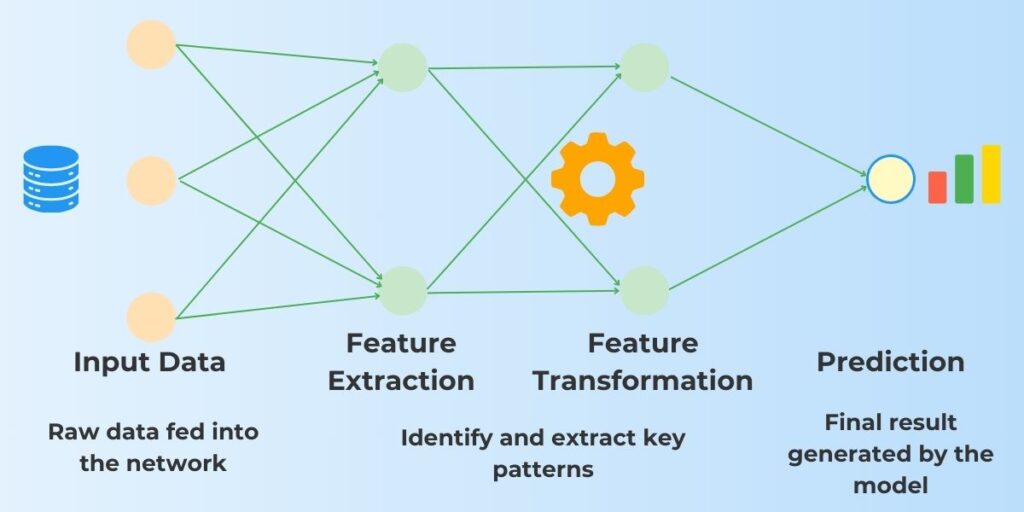

2. Key Components in Building and Training TensorFlow Models

TensorFlow facilitates building and training neural networks through several key building blocks. Dense (fully connected) layers connect each neuron to every output of the previous layer, letting the network combine input features. Activation functions such as ReLU and sigmoid apply non-linear transformations, which allow the network to model non-linear relationships. Loss functions measure the error between the model's predictions and the true targets, and optimizers use the gradients of that loss to adjust the weights so that predictions become more reliable. This list is not exhaustive, but it covers the best-known components. Together, they form the foundation for building TensorFlow models as a beginner, simplifying the process and making complex architectures more approachable.
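To see how these components fit together, here is a minimal sketch of a hand-written training loop using `tf.GradientTape`. It assumes a small synthetic binary-classification dataset; the layer sizes, learning rate, and number of steps are illustrative choices, not recommendations. Dense layers with ReLU and sigmoid activations produce predictions, binary cross-entropy measures the error, and the Adam optimizer uses the gradients of that loss to update the weights.

```python
import numpy as np
import tensorflow as tf

# Assumption: toy data and hyperparameters chosen only for illustration.
tf.keras.utils.set_random_seed(0)
x = np.random.rand(64, 3).astype("float32")
# Label is 1 when the three features sum to more than 1.5.
y = (x.sum(axis=1) > 1.5).astype("float32").reshape(-1, 1)

# Dense layers with non-linear activation functions.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(4, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Loss function: measures the error between predictions and targets.
loss_fn = tf.keras.losses.BinaryCrossentropy()
# Optimizer: uses the gradients of the loss to adjust the weights.
optimizer = tf.keras.optimizers.Adam(learning_rate=0.05)


def train_step(inputs, targets):
    """Run one forward pass, compute the loss, and update the weights."""
    with tf.GradientTape() as tape:
        predictions = model(inputs, training=True)
        loss = loss_fn(targets, predictions)
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return float(loss)


initial_loss = float(loss_fn(y, model(x)))
for _ in range(100):
    final_loss = train_step(x, y)  # loss computed at the most recent step
print(f"Loss before training: {initial_loss:.3f}, at the last step: {final_loss:.3f}")
```

In everyday Keras code, `model.compile()` and `model.fit()` run this same loop for you; writing it out once simply makes each component's role visible.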

3. Benefits of TensorFlow for Building and Training Models

TensorFlow simplifies neural network creation and training through easy-to-use APIs. It supports diverse architectures, such as CNNs and RNNs, which makes the framework versatile across applications. Importantly, it scales to big data projects, an increasingly common problem domain for organizations. When working with large-scale data, combining TensorFlow with Apache Spark can further improve scalability and performance. Learn more about optimizing Spark performance and speed to maximize efficiency in big data workflows. TensorFlow also boosts developer productivity with tools for rapid prototyping, allowing developers to test different models quickly. With these strengths, beginners can learn to build TensorFlow models and confidently create sequential models tailored to their data needs.

4. Challenges in Building and Training TensorFlow Models

However, building and training neural networks also poses several challenges. Common ones include data availability, dataset quality, and limited computational resources. Neural networks typically need large datasets, and the required data is often difficult to gather. Dataset quality matters as well, since limited or imbalanced data often leads to overfitting. Complex models also require substantial computational resources to train, although many consider cloud computing a potential way to address this. In addition, selecting the right architecture and hyperparameters is time-intensive, which makes rapid prototyping, where developers quickly iterate over architectures and hyperparameters, an important capability. Finally, beginners may find debugging and fine-tuning models challenging. These are just a few examples of the challenges developers face when optimizing their neural networks.
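Rapid prototyping can be as simple as a loop over candidate settings. The sketch below is a minimal illustration under stated assumptions: a tiny synthetic dataset, a hypothetical `build_model` helper, and an arbitrary grid of hidden-layer sizes and learning rates, compared by their final validation loss. Dedicated tools such as KerasTuner automate larger searches.

```python
import numpy as np
import tensorflow as tf

# Assumption: synthetic data and a small, arbitrary search grid.
tf.keras.utils.set_random_seed(1)
x = np.random.rand(200, 3).astype("float32")
y = (x.sum(axis=1) > 1.5).astype("float32")


def build_model(hidden_units):
    """Hypothetical helper: a small binary classifier with one hidden layer."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(3,)),
        tf.keras.layers.Dense(hidden_units, activation="relu"),
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])


results = {}
for hidden_units in (4, 16):
    for learning_rate in (1e-3, 1e-2):
        model = build_model(hidden_units)
        model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
                      loss="binary_crossentropy", metrics=["accuracy"])
        history = model.fit(x, y, epochs=5, batch_size=32,
                            validation_split=0.2, verbose=0)
        # Keep the validation loss from the final epoch for comparison.
        results[(hidden_units, learning_rate)] = history.history["val_loss"][-1]

best = min(results, key=results.get)
print("Best (hidden_units, learning_rate):", best)
```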

Section 2: Building and Training Sequential Models with Keras in TensorFlow

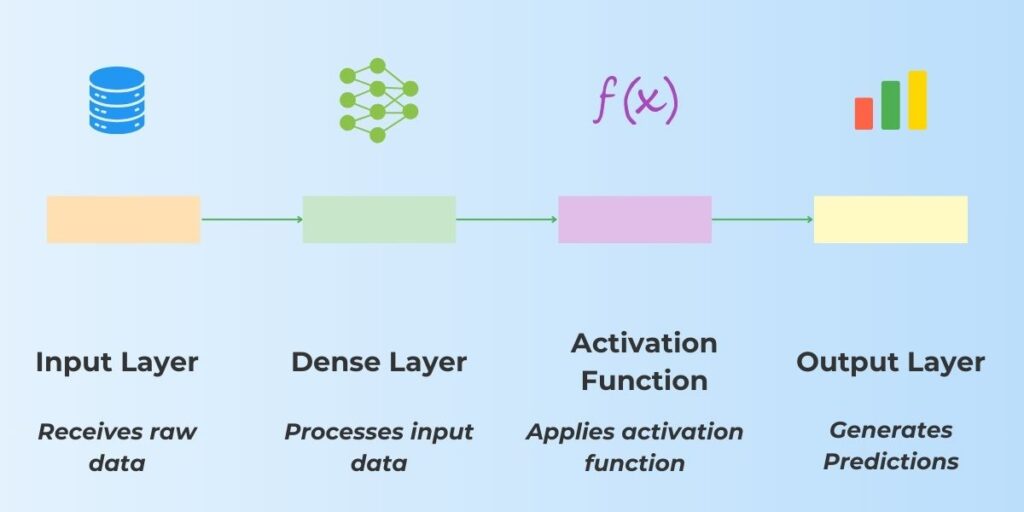

1. Overview of Keras

Keras is a user-friendly, high-level API that complements TensorFlow to simplify model creation and training. It lets developers build sequential models by stacking layers in a linear order, an approach that suits beginners learning neural network basics. Keras also integrates well with TensorFlow's advanced features, making it a powerful tool for experienced developers too. Its support for rapid prototyping lets developers iterate on their models quickly and makes them more productive.

2. Key Features of Sequential Models in TensorFlow

TensorFlow with Keras lets developers build sequential models with the following features. Developers can select activation functions, such as ReLU and softmax, to define each neuron's output. The framework also provides dropout layers to counter the overfitting challenge mentioned above. During each training step, a dropout layer randomly sets a fraction of its inputs to zero, effectively dropping out those neurons; at inference time, it passes its inputs through unchanged. Keras also provides fully connected (Dense) layers, which allow the model to learn complex patterns. Batch normalization is another feature; it normalizes layer outputs and improves training stability.

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

# Define a Sequential model
model = Sequential([
    # Input layer and Dense Layer 1
    Dense(units=128, activation='relu', input_shape=(3,)),
    # Dense Layer 2
    Dense(units=64, activation='relu'),
    # Output layer
    Dense(units=10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Display the model's architecture
model.summary()
```
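The model above does not yet use the dropout or batch normalization layers described earlier. Here is a minimal sketch of the same architecture with both added; placing `BatchNormalization` after a Dense layer and `Dropout(0.5)` after each hidden layer is a common but assumed choice, not a rule. The second half shows dropout in isolation: in training mode, kept values are scaled by 1 / (1 - rate), and in inference mode the layer does nothing.

```python
import numpy as np
import tensorflow as tf

# Variant of the model above with batch normalization and dropout added.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.BatchNormalization(),  # uses batch statistics while training
    tf.keras.layers.Dropout(0.5),          # drops 50% of units, training only
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])

# Dropout on its own: with rate 0.5, kept values are scaled by 1 / (1 - 0.5) = 2,
# so in training mode every entry becomes either 0.0 or 2.0.
dropout = tf.keras.layers.Dropout(0.5)
ones = np.ones((1, 8), dtype="float32")
train_out = dropout(ones, training=True).numpy()
# In inference mode, dropout passes its input through unchanged.
infer_out = dropout(ones, training=False).numpy()
print(train_out)
print(infer_out)
```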

3. Steps for Building and Training Sequential Models

With these tools, developers can build and train sequential models in the following steps. First, they design the model by stacking layers with the Sequential API. Next, they compile the model with an optimizer and a loss function to prepare it for training. Then they train the model on batches of data and evaluate its performance with metrics such as accuracy. Depending on the results, developers repeat these steps until the performance metrics are satisfactory.
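The four steps map directly onto Keras calls. This is a minimal end-to-end sketch assuming random placeholder data with 3 features and 10 one-hot encoded classes; because the labels are random, the reported accuracy is meaningless and only demonstrates the workflow.

```python
import numpy as np
import tensorflow as tf

# Assumption: random placeholder data; replace with a real dataset.
tf.keras.utils.set_random_seed(42)
num_classes = 10
x_train = np.random.rand(500, 3).astype("float32")
y_train = tf.keras.utils.to_categorical(np.random.randint(num_classes, size=500), num_classes)
x_test = np.random.rand(100, 3).astype("float32")
y_test = tf.keras.utils.to_categorical(np.random.randint(num_classes, size=100), num_classes)

# Step 1: stack layers with the Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dense(num_classes, activation="softmax"),
])

# Step 2: compile with an optimizer, a loss function, and metrics.
model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])

# Step 3: train on mini-batches of 32 samples.
history = model.fit(x_train, y_train, batch_size=32, epochs=3, verbose=0)

# Step 4: evaluate on held-out data; evaluate() returns [loss, accuracy].
test_loss, test_accuracy = model.evaluate(x_test, y_test, verbose=0)
print(f"Test loss: {test_loss:.3f}, test accuracy: {test_accuracy:.3f}")
```

Iterating then means changing the layers, compile settings, or training parameters and rerunning steps 1 to 4.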

4. Advantages of Using Sequential Models

Having looked at the steps for building sequential models, we briefly consider some of their advantages. They are intuitive, which makes them easy for beginners to implement. They are well suited to models that form a simple linear stack of layers, such as feedforward neural networks. Their straightforward architecture also simplifies debugging. Finally, they integrate seamlessly with TensorFlow's extensive tools and libraries.

Section 3: Implementing CNNs for Building and Training TensorFlow Models

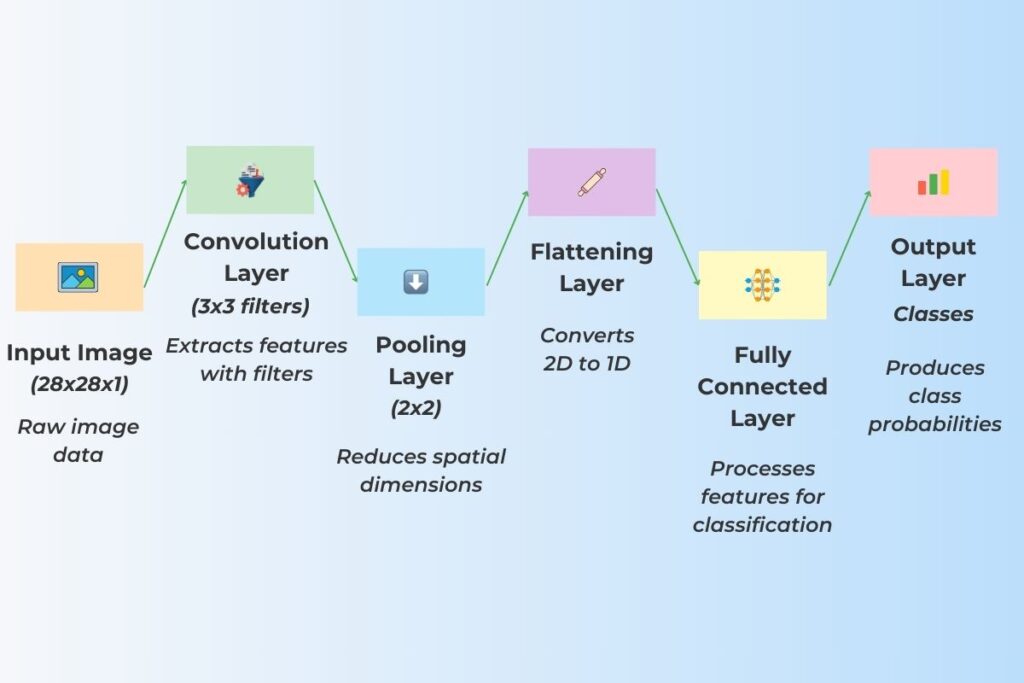

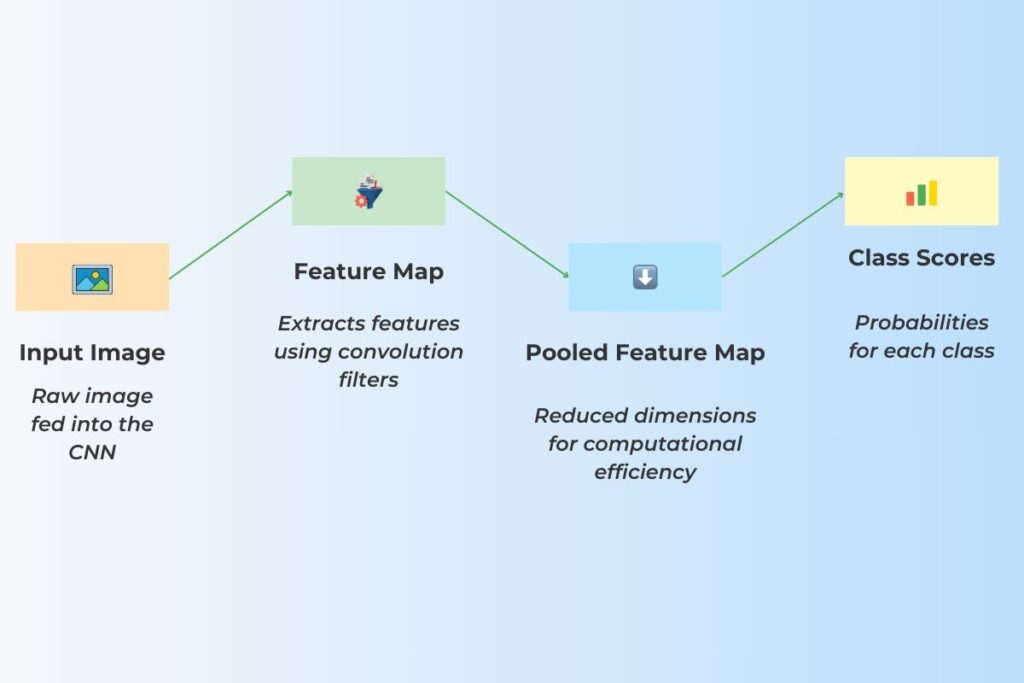

1. Introduction to CNNs in Building and Training TensorFlow Models

Developers can choose from several neural network architectures to suit their problem domain. For problems involving image processing, convolutional neural networks (CNNs) are a good choice. CNNs consist of convolutional layers that detect patterns in images, and they typically include pooling layers that reduce data dimensionality. Beyond pattern detection, they are well suited to image classification.

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Define a Sequential CNN model
model = Sequential([
    # Input layer: Convolutional layer with 32 filters and 3x3 kernel
    Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=(28, 28, 1)),
    # Pooling layer to reduce spatial dimensions
    MaxPooling2D(pool_size=(2, 2)),
    # Flatten the feature map to feed into Dense layers
    Flatten(),
    # Fully connected layer
    Dense(units=128, activation='relu'),
    # Output layer for classification (10 classes)
    Dense(units=10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Display the model's architecture
model.summary()
```

2. Key Layers in CNN Architectures

Several layer types give CNNs their strength in image processing. Convolutional layers apply filters that capture features, such as edges and textures, from images. Pooling layers downsample the resulting feature maps to improve efficiency. Fully connected layers then analyze the combined features. Dropout layers are also commonly added to reduce overfitting during training.
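It helps to trace how each layer changes the shape of the data. With a 3x3 kernel and no padding, a 28x28 input shrinks to 26x26 (28 - 3 + 1); 2x2 max pooling halves that to 13x13; and flattening 32 feature maps gives 13 × 13 × 32 = 5,408 values. The sketch below assumes the same 28x28 grayscale input and 10 classes as the earlier CNN, adds a dropout layer, and passes one dummy image through the layers to confirm these shapes.

```python
import tensorflow as tf

# Assumption: 28x28 grayscale inputs and 10 classes, as in the model above.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),  # 3x3 filters, no padding
    tf.keras.layers.MaxPooling2D((2, 2)),                   # halves height and width
    tf.keras.layers.Flatten(),                              # 13 * 13 * 32 = 5408 values
    tf.keras.layers.Dropout(0.5),                           # active only during training
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])

# Pass one dummy image through each layer and record the output shape.
shapes = []
x = tf.zeros((1, 28, 28, 1))
for layer in model.layers:
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(f"{layer.name:>15}: {shapes[-1]}")
```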

3. Advanced Features of CNNs in TensorFlow

Complementing these basic layer types, TensorFlow provides several advanced features that extend the applicability of CNNs. The framework supports pre-trained models for transfer learning, and it supports building deeper networks for improved pattern detection. It also makes CNNs flexible enough for tasks such as object detection and segmentation, and it can scale CNN training to big data workloads.
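As an example of transfer learning, here is a minimal sketch that reuses `tf.keras.applications.MobileNetV2` as a frozen feature extractor and adds a new classification head. The choice of MobileNetV2, the 160x160 input size, and the helper name `build_transfer_model` are assumptions for illustration; any model in `tf.keras.applications` follows a similar pattern.

```python
import tensorflow as tf


def build_transfer_model(num_classes, weights="imagenet", input_shape=(160, 160, 3)):
    """Hypothetical helper: frozen MobileNetV2 base plus a new classification head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights)
    base.trainable = False  # freeze the pre-trained convolutional layers

    inputs = tf.keras.Input(shape=input_shape)
    # MobileNetV2 expects pixel values scaled from [0, 255] to [-1, 1].
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

Calling `build_transfer_model(num_classes=5)` downloads the ImageNet weights on first use; only the new Dense head is trainable, so training is much cheaper than training the full network from scratch.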

4. Common Challenges in Implementing CNNs for Building and Training TensorFlow Models

Along with these advantages, there are several challenges to consider when selecting a CNN architecture. They mirror the common challenges in building TensorFlow models, including overfitting, memory constraints, and high computational requirements. CNNs require significant computational power when trained on large datasets. They are also susceptible to overfitting when image data is limited or imbalanced. Designing an optimal architecture often involves trial and error, which makes rapid prototyping a vital technique. Finally, processing high-dimensional data can lead to memory constraints during training.
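One common way to reduce overfitting on limited image data is data augmentation with Keras preprocessing layers. This minimal sketch assumes 28x28 grayscale images and picks small rotations, zooms, and shifts; which transformations are safe depends on the data (horizontal flips, for instance, would change the meaning of some digits), so treat these as assumptions.

```python
import tensorflow as tf

# Assumption: small random rotations, zooms, and shifts do not change the label.
data_augmentation = tf.keras.Sequential([
    tf.keras.layers.RandomRotation(0.05),
    tf.keras.layers.RandomZoom(0.1),
    tf.keras.layers.RandomTranslation(0.1, 0.1),
])

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    data_augmentation,  # random transforms apply only when training=True, e.g. in fit()
    tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),
])

images = tf.random.uniform((4, 28, 28, 1))
augmented = data_augmentation(images, training=True)
print(augmented.shape)
```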

Section 4: Using RNNs for Building and Training TensorFlow Models

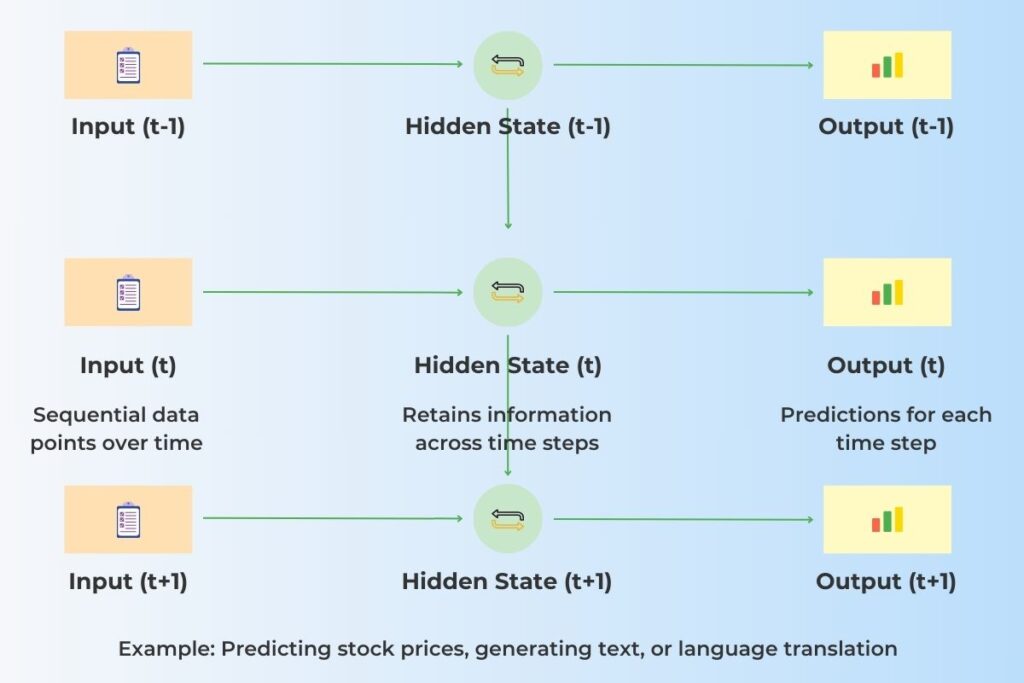

1. Overview of RNNs in Building and Training TensorFlow Models

While CNNs are optimized for image processing, recurrent neural networks (RNNs) are designed to analyze sequential data. Their defining feature is the ability to retain information from previous time steps, which makes them useful for tasks such as time series analysis and language modeling. TensorFlow provides various RNN layers for building such networks.

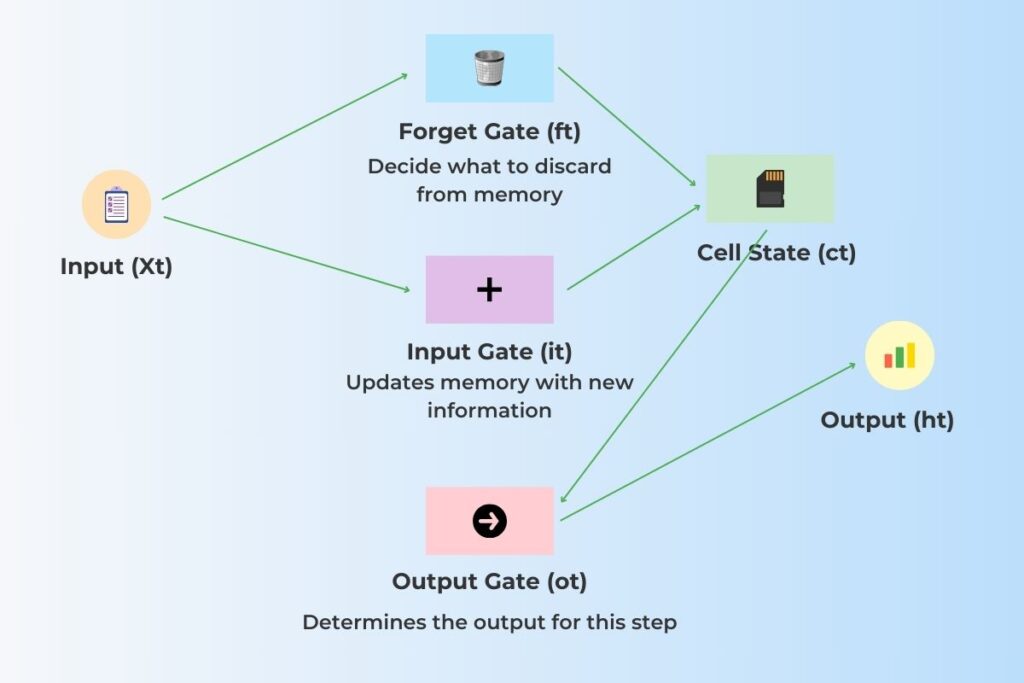

2. Key Architectures for Building and Training TensorFlow RNN Models

TensorFlow provides several recurrent layers for building networks that analyze sequence data. SimpleRNN handles basic sequence modeling. When a network needs to capture long-term dependencies, long short-term memory (LSTM) layers are the usual choice, and gated recurrent units (GRUs) offer a simpler alternative to LSTM. TensorFlow also allows stacking RNN layers to capture more complex patterns.

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense

# Define a Sequential RNN model with LSTM for time series prediction
model = Sequential([
    # LSTM layer with 50 units, expecting input sequences of length 10
    # with 1 feature at each time step. return_sequences defaults to False,
    # so the layer outputs only its final state: one prediction per sequence.
    LSTM(units=50, input_shape=(10, 1)),
    # Dense output layer with 1 unit and linear activation for predicting
    # the next value in the time series.
    Dense(units=1, activation='linear')
])

# Compile the model with Adam optimizer and mean squared error loss.
model.compile(optimizer='adam', loss='mse', metrics=['mae'])

# Display the model's architecture.
model.summary()
```
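To illustrate stacking and the GRU alternative, here is a minimal sketch that turns a series into 10-step input windows with next-value targets and trains two stacked GRU layers. The sine wave stands in for a real time series, and `make_windows` is a hypothetical helper. Note that every recurrent layer except the last needs `return_sequences=True` so that the next layer receives a full sequence.

```python
import numpy as np
import tensorflow as tf


def make_windows(series, window=10):
    """Hypothetical helper: split a 1-D series into (window, 1) inputs and next values."""
    inputs = np.stack([series[i:i + window] for i in range(len(series) - window)])
    targets = series[window:]
    return inputs[..., np.newaxis].astype("float32"), targets.astype("float32")


# Assumption: a noiseless sine wave stands in for a real time series.
series = np.sin(np.linspace(0, 20 * np.pi, 500))
x, y = make_windows(series)  # x: (490, 10, 1), y: (490,)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(10, 1)),
    # Intermediate recurrent layers return the full sequence...
    tf.keras.layers.GRU(32, return_sequences=True),
    # ...while the last one returns only its final state.
    tf.keras.layers.GRU(16),
    tf.keras.layers.Dense(1),  # linear output: the predicted next value
])
model.compile(optimizer="adam", loss="mse", metrics=["mae"])
model.fit(x, y, epochs=2, batch_size=32, verbose=0)

next_value = model.predict(x[-1:], verbose=0)
print(next_value.shape)
```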

3. Applications of RNNs in TensorFlow

As mentioned, RNNs suit sequential data processing, including time series analysis and language modeling. More specifically, they apply to language translation and text generation. Stock market prediction is an example of a time series task. Other natural language applications include sentiment analysis. RNNs are also used for music and speech generation.
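For sentiment analysis, a typical recurrent setup maps words to integer ids, embeds them, and feeds the sequence to an LSTM. The sketch below is a minimal illustration built on a made-up four-review corpus; the vocabulary limit, sequence length, and layer sizes are assumptions, and a real model would need far more data.

```python
import tensorflow as tf

# Assumption: a toy four-review corpus with 1 = positive, 0 = negative.
texts = tf.constant(["great movie", "terrible plot", "loved it", "not good at all"])
labels = tf.constant([1.0, 0.0, 1.0, 0.0])

# Map words to integer token ids, padded or truncated to 8 tokens.
vectorizer = tf.keras.layers.TextVectorization(max_tokens=1000, output_sequence_length=8)
vectorizer.adapt(texts)
token_ids = vectorizer(texts)  # shape: (4, 8)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,), dtype="int64"),
    tf.keras.layers.Embedding(input_dim=vectorizer.vocabulary_size(), output_dim=16),
    tf.keras.layers.LSTM(16),
    tf.keras.layers.Dense(1, activation="sigmoid"),  # probability of positive sentiment
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(token_ids, labels, epochs=2, verbose=0)

probabilities = model.predict(token_ids, verbose=0)
print(probabilities.ravel())
```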

4. Challenges in Using RNNs for Building and Training TensorFlow Models

RNNs share some challenges with CNNs, but others are unique to RNNs. RNNs can suffer from vanishing or exploding gradients during training. Training on long sequences also requires significant computational resources; cloud platforms such as Google Colab and Amazon SageMaker may help address this. As with CNNs, overfitting can occur, often with small or imbalanced datasets. Finally, tuning hyperparameters such as the learning rate and sequence length is often time-consuming, although rapid prototyping can help.
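Gradient clipping is a standard defense against exploding gradients. This minimal sketch shows two options: passing `clipnorm` to a Keras optimizer, which clips each weight's gradient to a maximum L2 norm, and calling `tf.clip_by_global_norm` in a custom training loop. The threshold of 1.0 and the LSTM model are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Option 1: let the optimizer clip each gradient tensor to an L2 norm of at most 1.0.
optimizer = tf.keras.optimizers.Adam(learning_rate=1e-3, clipnorm=1.0)
model = tf.keras.Sequential([
    tf.keras.Input(shape=(10, 1)),
    tf.keras.layers.LSTM(50),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer=optimizer, loss="mse")

# Option 2: clip manually in a custom training loop. A gradient whose
# global norm is 5 (sqrt(3^2 + 4^2)) is rescaled so that its norm becomes 1.
grads = [tf.constant([3.0, 4.0])]
clipped, global_norm = tf.clip_by_global_norm(grads, clip_norm=1.0)
print(global_norm.numpy())  # the norm before clipping: 5.0
print(clipped[0].numpy())   # approximately [0.6, 0.8]
```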

Section 5: Training and Evaluating TensorFlow Models

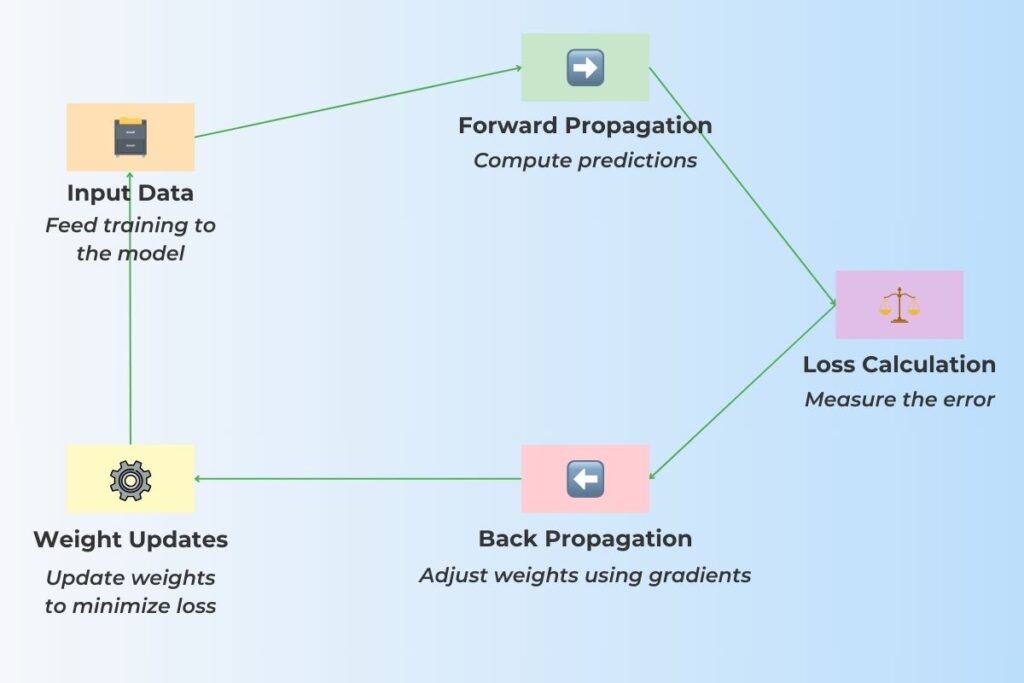

1. Training TensorFlow Models for Better Accuracy

We have explored many advantages of TensorFlow and Keras, but realizing them depends on using these tools effectively. Adopting best practices for training TensorFlow neural networks, such as data augmentation, batch normalization, and gradient clipping, can significantly improve training outcomes. Models are usually trained on mini-batches of data rather than the entire dataset at once, so the weights are updated iteratively after each batch. Optimizers such as Adam use the computed gradients to perform these weight updates. Developers should also tune the learning rate to improve performance while monitoring the loss for signs of overfitting or underfitting.
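Here is a minimal sketch that combines these practices: a `tf.data` pipeline that feeds shuffled mini-batches, Adam with an explicit learning rate, a `ReduceLROnPlateau` callback that halves the learning rate when validation loss stalls, and a printout comparing training and validation loss. The synthetic data and all numeric settings are assumptions chosen for illustration.

```python
import numpy as np
import tensorflow as tf

# Assumption: synthetic data; the learning-rate settings are illustrative.
tf.keras.utils.set_random_seed(0)
x = np.random.rand(1000, 20).astype("float32")
y = (x[:, :10].sum(axis=1) > 5).astype("float32")

# Feed the data in shuffled mini-batches of 32 rather than all at once.
train_ds = tf.data.Dataset.from_tensor_slices((x[:800], y[:800])).shuffle(800).batch(32)
val_ds = tf.data.Dataset.from_tensor_slices((x[800:], y[800:])).batch(32)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
              loss="binary_crossentropy", metrics=["accuracy"])

# Halve the learning rate when validation loss has not improved for 2 epochs.
reduce_lr = tf.keras.callbacks.ReduceLROnPlateau(
    monitor="val_loss", factor=0.5, patience=2, min_lr=1e-5)
history = model.fit(train_ds, validation_data=val_ds, epochs=10,
                    callbacks=[reduce_lr], verbose=0)

# Compare training and validation loss to spot over- or underfitting.
for epoch, (loss, val_loss) in enumerate(
        zip(history.history["loss"], history.history["val_loss"]), start=1):
    print(f"epoch {epoch:2d}: loss={loss:.3f} val_loss={val_loss:.3f}")
```

A validation loss that rises while the training loss keeps falling suggests overfitting; both staying high suggests underfitting.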

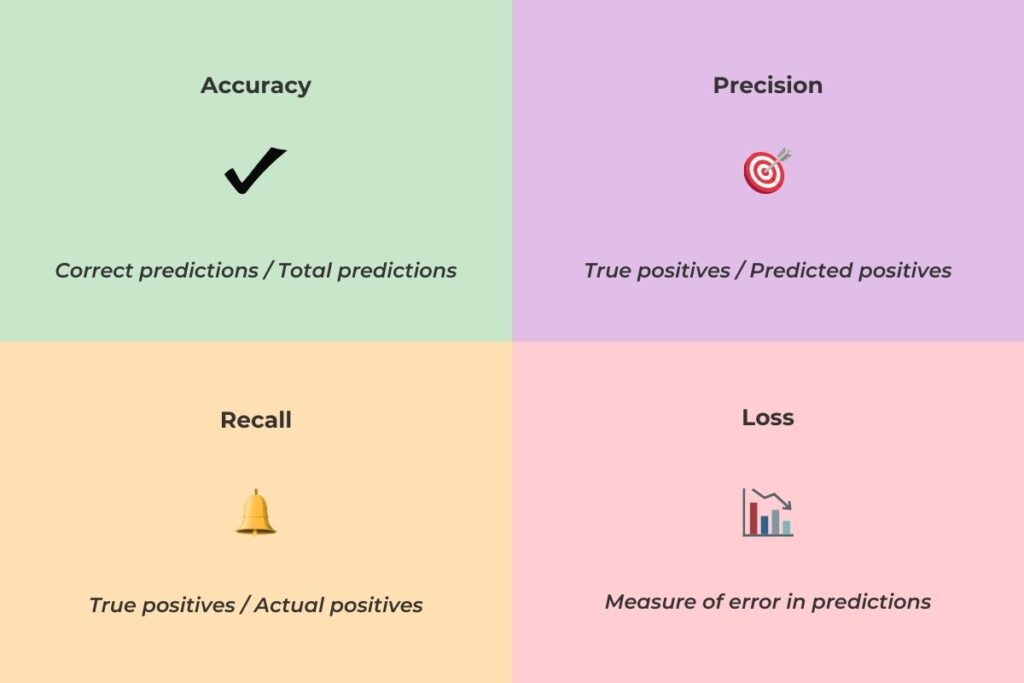

2. Evaluating TensorFlow Models During and After Training

Developers should also evaluate TensorFlow models continually during and after training, using the following tools and techniques. Tracking several metrics, such as accuracy, precision, and recall, gives a more complete picture of model performance than any single metric. Validation splits are essential because they provide a less biased estimate of performance on data the model was not trained on. TensorBoard lets developers visualize training progress and understand model performance intuitively. With these evaluation results, developers are better able to fine-tune their architectures.

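```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.utils import to_categorical

# Placeholder data (random values, for illustration only):
# 100 features per sample and 10 one-hot encoded classes.
num_classes = 10
x_train = np.random.rand(800, 100).astype('float32')
y_train = to_categorical(np.random.randint(num_classes, size=800), num_classes)
x_val = np.random.rand(100, 100).astype('float32')
y_val = to_categorical(np.random.randint(num_classes, size=100), num_classes)
x_test = np.random.rand(100, 100).astype('float32')
y_test = to_categorical(np.random.randint(num_classes, size=100), num_classes)

# Define a Sequential model
model = Sequential([
    # First hidden layer; input_shape declares 100 input features
    Dense(units=128, activation='relu', input_shape=(100,)),
    # Second hidden layer
    Dense(units=64, activation='relu'),
    # Output layer: one probability per class
    Dense(units=10, activation='softmax')
])

# Compile the model with evaluation metrics. Precision and recall are passed
# as metric objects; they are computed over all class outputs at a 0.5 threshold.
model.compile(
    optimizer='adam',
    loss='categorical_crossentropy',
    metrics=['accuracy',
             tf.keras.metrics.Precision(name='precision'),
             tf.keras.metrics.Recall(name='recall')]
)

# Display the model's architecture
model.summary()

# Train the model, tracking metrics on the validation data after each epoch
history = model.fit(
    x_train, y_train,
    epochs=10,
    validation_data=(x_val, y_val)
)

# Evaluate the model; returns [loss, accuracy, precision, recall]
evaluation = model.evaluate(x_test, y_test)

# Print evaluation metrics
print("Test Accuracy:", evaluation[1])
print("Test Precision:", evaluation[2])
print("Test Recall:", evaluation[3])
```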
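To visualize training progress in TensorBoard, add a `tf.keras.callbacks.TensorBoard` callback to `fit()`. This minimal sketch assumes random placeholder data and writes logs to a temporary directory; in a real project you would choose a persistent log path.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Assumption: a temporary log directory and random placeholder data.
log_dir = os.path.join(tempfile.mkdtemp(), "fit")
tensorboard_cb = tf.keras.callbacks.TensorBoard(log_dir=log_dir)

x = np.random.rand(200, 100).astype("float32")
y = tf.keras.utils.to_categorical(np.random.randint(10, size=200), 10)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(100,)),
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])

# The callback writes loss and metric summaries for each epoch.
model.fit(x, y, epochs=2, validation_split=0.2,
          callbacks=[tensorboard_cb], verbose=0)
print("TensorBoard logs written to:", log_dir)
```

Running `tensorboard --logdir` with that directory then shows the loss and metric curves for the training and validation data in the browser.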

3. Advanced Training Techniques for Building and Training TensorFlow Models

Once developers have mastered the basics of building and training models, they can turn to advanced methods. Best practices such as early stopping, dropout layers, and transfer learning improve model generalization and robustness. Early stopping halts training once validation performance stops improving, which helps prevent overfitting. Dropout layers help the model generalize better, and so does transfer learning, which reuses a model pre-trained on a related task. Testing models on unseen datasets is also vital for verifying their robustness. For a more in-depth discussion, please refer to our articles on advanced TensorFlow techniques and on harnessing TensorFlow.
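Early stopping is available as a Keras callback. In this minimal sketch the data is random placeholder data with random labels, so the model can only memorize; validation loss is therefore likely to stop improving within a few epochs, at which point training stops. The patience of 3 epochs and the 50-epoch cap are assumptions; `restore_best_weights=True` rolls the model back to the epoch with the lowest validation loss.

```python
import numpy as np
import tensorflow as tf

# Assumption: random placeholder data with random binary labels.
tf.keras.utils.set_random_seed(0)
x = np.random.rand(300, 20).astype("float32")
y = np.random.randint(0, 2, size=300).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dropout(0.3),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Stop when val_loss has not improved for 3 epochs; keep the best weights.
early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=3, restore_best_weights=True)
history = model.fit(x, y, validation_split=0.2, epochs=50,
                    callbacks=[early_stopping], verbose=0)
print("Epochs run:", len(history.history["loss"]))
```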

4. Common Pitfalls in Training and Evaluating TensorFlow Models

As mentioned several times, although TensorFlow models have many advantages, they also come with challenges. Following best practices for training TensorFlow neural networks, including proper data preprocessing, hyperparameter tuning, and appropriate evaluation metrics, helps developers avoid common pitfalls and ensures that models perform effectively on unseen data. Training data quality is paramount, and adequate preprocessing is crucial to prevent poor model performance. Another pitfall is overfitting, where the model memorizes the training data instead of generalizing from it. Developers must also choose evaluation metrics wisely so that they represent model success correctly. Finally, neglecting hyperparameter tuning can result in suboptimal performance.
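As one concrete preprocessing step, features on very different scales can be standardized with a Keras `Normalization` layer. This minimal sketch assumes two made-up features; `adapt()` learns each feature's mean and variance from the data, and placing the layer inside the model ensures the same scaling is applied at inference time.

```python
import numpy as np
import tensorflow as tf

# Assumption: two made-up features on very different scales.
raw = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0]], dtype="float32")

# Learn each feature's mean and variance from the training data...
normalizer = tf.keras.layers.Normalization(axis=-1)
normalizer.adapt(raw)
# ...then rescale every feature to zero mean and unit variance.
normalized = normalizer(raw).numpy()
print(normalized)

# Inside the model, the same preprocessing is applied to new data automatically.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(2,)),
    normalizer,
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1),
])
```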

Conclusion

The TensorFlow framework is a powerful toolbox for building diverse neural networks that extract insights from big data. Keras complements the framework by simplifying the creation of Sequential models. Two common architectures, CNNs and RNNs, address a wide range of big data problems. CNNs, with their deep architectures, excel at image-related tasks, while RNNs, especially those built from LSTM or GRU memory units, are better suited to sequential data.

However, developers must still apply good practices in training and evaluating models to optimize them for real-world applications. Understanding best practices for training TensorFlow neural networks is key to fully leveraging the potential of this robust framework, and addressing the common challenges in building TensorFlow models is essential for creating strong, practical solutions. Implementing these practices and leveraging advanced tools can mitigate those challenges effectively. With this guide to building TensorFlow models for beginners, developers can kickstart their journey into deep learning with TensorFlow and Keras.