Proliferation of Big Data

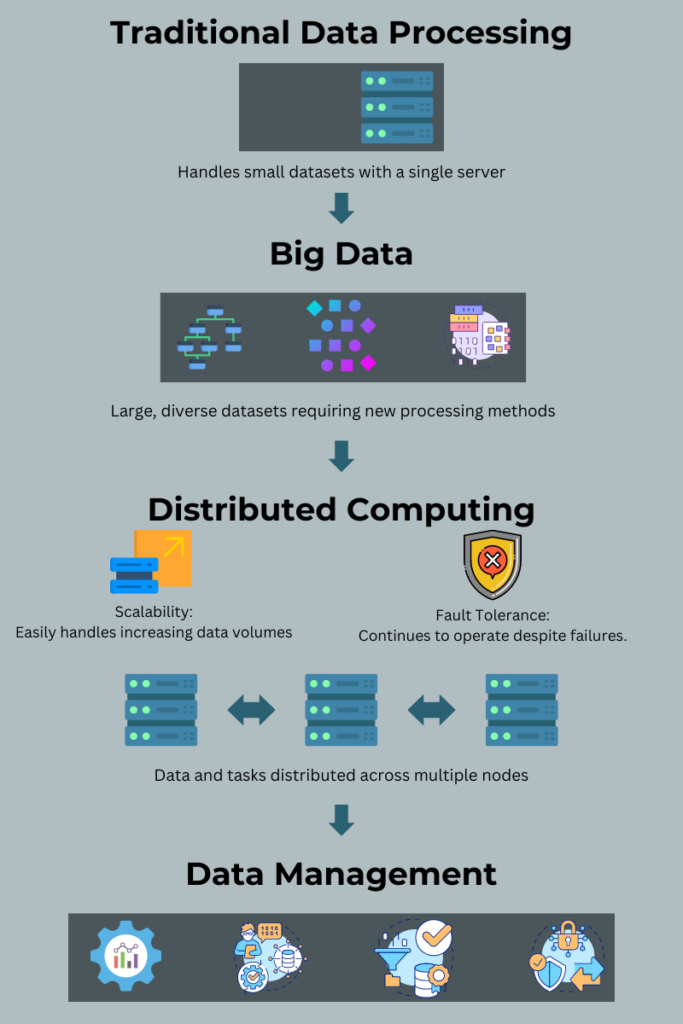

The internet now touches nearly every part of daily life, and the volume of data generated each day is staggering. Traditional data processing applications buckle under the weight of these vast, diverse datasets, which has given rise to a distinct field: big data infrastructure. This infrastructure must handle the speed and complexity of modern data by providing robust systems that store, process, and analyze information at very large scale. Join us as we look at how big data infrastructure works and at the technologies that make it possible.

Core Requirements for Big Data Infrastructure

The core requirement for such infrastructure is the timely storage, processing, and analysis of massive volumes of data. At the same time, it must be scalable and flexible enough to handle growing data volumes and diverse data structures. This calls for distributed computing and storage systems that manage data across multiple nodes or servers. Other considerations include security, fault tolerance, and data management techniques. The exponential increase in hardware processing power has made distributed computing practical, and the same trend has made distributed storage practical by spreading data across clusters of interconnected nodes. Distributed storage in turn enables scalability, fault tolerance, and high availability.

Challenges and Solutions in Big Data Infrastructure

Traditional applications cannot handle large and diverse datasets, even when they run on distributed computing and storage systems. To do so, an application must be able to distribute tasks and data across multiple computing nodes and their associated distributed memory. This in turn requires software frameworks that provide the components applications need to distribute tasks and data effectively across computing and storage nodes. Distributed storage also demands storage methodologies that differ from traditional relational database systems and can handle data in varied formats.

Setting up distributed computing and storage systems is time-consuming, and leaving them running when they are not in use is wasteful. Automated setup and teardown of these systems, along with the ability to pay for them only while they are in use, broadens the economic appeal of such infrastructure.

Distributed Computing: The Backbone of Big Data Processing

Evolution of Parallel Processing in Big Data Infrastructure

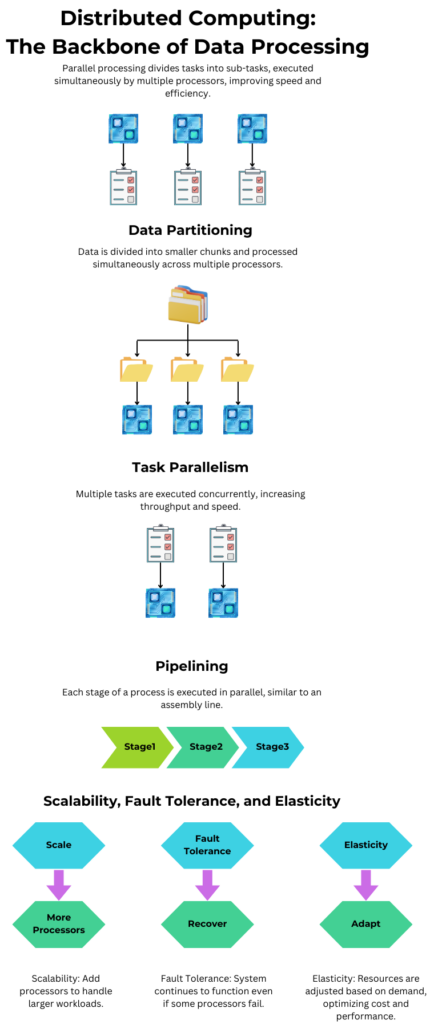

Parallel processing has been an area of research throughout the history of electronic computing. Its goal is to subdivide a computing task across multiple processors, ideally improving processing speed by a factor proportional to the number of processors. It is a new take on the proverb that many hands make light work. The introduction of modern microprocessors and the exponential increase in their processing power accelerated the development of parallel processing and brought it into mainstream computing. Two developments have further augmented parallel processing capability: the ability to fabricate multiple processing cores on a single microprocessor, and the continually increasing sophistication of parallel processing algorithms.

Benefits of Parallel Processing in Big Data

Parallel processing improves computational speed by dividing a task into sub-tasks and executing these sub-tasks simultaneously or in parallel. However, coordinating these tasks is necessary to ensure proper synchronization and consistent results. This coordination overhead can sometimes limit the speedup benefits of parallel processing. Multiple processors enable fault tolerance; if one or more processors fail, the remaining processors are reconfigured to ensure processing continuity. Scalability is also possible where additional processors can be added whenever processing workload or data volume increases.

Algorithms responsible for coordinating multiple processors are designed to be flexible, which is another benefit of parallel processing. Flexibility covers the dynamic readjustment of resources based on workload requirements and business needs. This also enables resource efficiency to optimize performance and reduce idle time.

Scalability is a crucial benefit of parallel processing, allowing the handling of more concurrent tasks and larger datasets. However, linear scaling is often impossible due to coordination overheads and dependencies between subtasks. Elasticity is a related concept in which resources can be scaled up or down based on demand, introducing cost efficiency.
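One common way to reason about why linear scaling is rarely reached is Amdahl's law. The text above does not name it; it is brought in here as an illustrative model. It assumes that a fixed fraction of a job is inherently serial and that the rest parallelizes perfectly, which is a simplification. The function name and the 90% figure below are assumptions chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the work that can run in parallel (0..1).
    processors: number of processors applied to the parallel share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Illustrative numbers only: 90% of the job is assumed parallelizable.
    for n in (1, 2, 8, 64, 1024):
        print(f"{n:>5} processors -> speedup {amdahl_speedup(0.9, n):.2f}x")
```

Under this model, a job that is 90% parallelizable can never run more than 10 times faster, however many processors are added, because the serial 10% still has to run on one processor.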

Key Parallel Processing Strategies for Big Data Infrastructure

Two main categories of parallel processing, data partitioning and task parallelism, suit different classes of tasks. In data partitioning, data is divided into smaller partitions or chunks and distributed across multiple processors for simultaneous execution. In task parallelism, multiple tasks are executed concurrently, improving execution speed and throughput. Another popular configuration is pipelining, where a computing operation is split into stages and the stages run in parallel, each working on a different item of data.
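The difference between data partitioning and task parallelism can be sketched with Python's standard `concurrent.futures` module. This is a minimal single-machine illustration, not a cluster implementation: the helper names (`partition`, `data_parallel_sum_of_squares`, `task_parallel_stats`) are hypothetical, and a thread pool stands in for the multiple processors described above.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_partitions):
    """Split a list into num_partitions contiguous chunks of near-equal size."""
    size, remainder = divmod(len(data), num_partitions)
    chunks, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def data_parallel_sum_of_squares(data, workers=4):
    """Data partitioning: the same function runs on every chunk."""
    chunks = partition(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(lambda chunk: sum(x * x for x in chunk), chunks)
        # Combine the per-chunk results into the final answer.
        return sum(partial_sums)


def task_parallel_stats(data):
    """Task parallelism: different functions run concurrently on the same data."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "min": pool.submit(min, data),
            "max": pool.submit(max, data),
            "total": pool.submit(sum, data),
        }
        return {name: future.result() for name, future in futures.items()}
```

In standard CPython, threads do not speed up CPU-bound work like this, so the sketch shows only the structure of each strategy. A real workload would hand the same chunks or tasks to separate processes or to the nodes of a cluster.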

Storage Systems for Big Data

Requirements for Big Data Storage

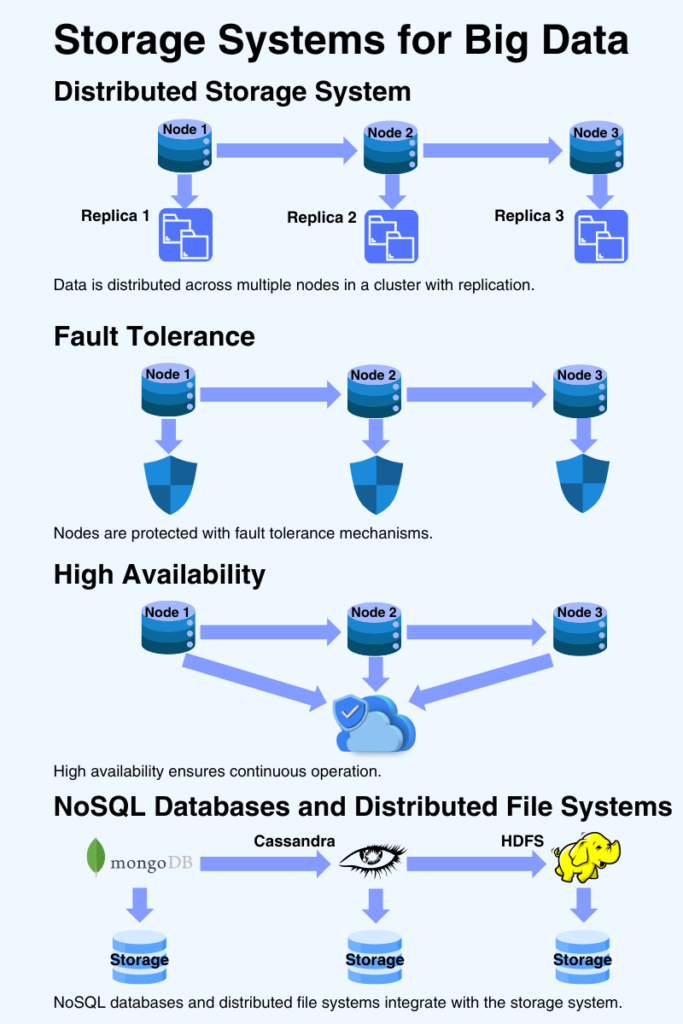

Storage system capabilities must be commensurate with big data processing needs, which leads to several requirements. Data reliability means that data is stored and retrieved without loss or corruption. Storage systems also need to scale seamlessly, accommodating growing volumes of data as processing needs grow. Performance is essential, since storage must provide timely access to data, and this makes retrieval efficiency crucial to supporting big data processing. Data security is critical to prevent unauthorized access, tampering, or corruption. Another essential consideration is disaster recovery, which includes robust backup and data replication strategies. Finally, cost-effectiveness matters, so that any big data solution falls within an organization’s budget.

Distributed File Systems for Big Data Storage

Configuring storage systems for big data as distributed file systems addresses these requirements. Distributing data across multiple nodes or servers in a cluster will improve data access speeds and reliability. Also, it is vital to replicate data across multiple nodes since redundancy will address fault tolerance and prevent data loss due to node failure. This also addresses the need for high availability, where requests are dynamically routed to healthy nodes. Distributed data systems also lend themselves to being scalable, where more nodes can be added to the cluster to accommodate increasing data volumes and concurrent access requests. The Hadoop Distributed File System (HDFS) is an open-source distributed file system that provides these capabilities through commodity hardware.
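The replication and routing ideas above can be pictured with a small in-memory model. This is a toy sketch under stated assumptions, not how HDFS is implemented: the `ToyCluster` class, its method names, and the round-robin placement rule are all hypothetical, chosen only to show why a block survives node failures when copies exist elsewhere.

```python
class ToyCluster:
    """In-memory stand-in for a storage cluster (illustrative only)."""

    def __init__(self, node_names, replication_factor=3):
        if replication_factor > len(node_names):
            raise ValueError("replication factor exceeds number of nodes")
        self.nodes = {name: {} for name in node_names}  # node -> {block_id: payload}
        self.healthy = set(node_names)
        self.replication_factor = replication_factor
        self._next = 0  # round-robin starting position for placement

    def write_block(self, block_id, payload):
        """Store the block on replication_factor distinct nodes."""
        names = list(self.nodes)
        targets = [
            names[(self._next + i) % len(names)]
            for i in range(self.replication_factor)
        ]
        self._next = (self._next + 1) % len(names)
        for name in targets:
            self.nodes[name][block_id] = payload
        return targets

    def fail_node(self, name):
        """Mark a node as unavailable, simulating a failure."""
        self.healthy.discard(name)

    def read_block(self, block_id):
        """Route the read to the first healthy node holding a replica."""
        for name, blocks in self.nodes.items():
            if name in self.healthy and block_id in blocks:
                return name, blocks[block_id]
        raise IOError(f"all replicas of {block_id} are unavailable")
```

With four nodes and a replication factor of three, a block stays readable after any two nodes fail. It becomes unavailable only when every node holding a replica is down.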

NoSQL Databases

NoSQL databases are another solution for big data storage, made practical by the decreasing cost of data storage hardware. A key advantage of NoSQL is its support for diverse data models, including unstructured data, which is a crucial requirement for big data processing. NoSQL databases also use distributed architectures, so they share many features of distributed file systems, including scalability, fault tolerance, and high availability. They are also optimized for performance, providing the high throughput and low-latency data access that big data processing requires. A variety of NoSQL technologies are optimized for different data processing needs; two examples are MongoDB and Apache Cassandra.
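The flexible data model mentioned above can be pictured with plain Python dictionaries standing in for documents. This is only a sketch of the idea that records in one collection need not share a schema; it does not use or imitate the API of any particular NoSQL product, and the `find` helper and sample records are hypothetical.

```python
# Three records in one collection, each with a different set of fields.
documents = [
    {"_id": 1, "type": "click", "user": "alice", "page": "/home"},
    {"_id": 2, "type": "purchase", "user": "bob", "items": [{"sku": "A1", "qty": 2}]},
    {"_id": 3, "type": "click", "user": "bob", "page": "/cart", "referrer": "/home"},
]


def find(collection, **criteria):
    """Return documents whose top-level fields match every criterion.

    Documents that lack a field simply do not match; no schema is enforced.
    """
    return [
        doc
        for doc in collection
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


clicks = find(documents, type="click")
bob_purchases = find(documents, user="bob", type="purchase")
```

A relational table would need a fixed set of columns, with nulls or extra tables for fields like `items` and `referrer`. Here each record carries only the fields it needs.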

Popular Tools: Hadoop and Apache Spark

Apache Spark and the Hadoop ecosystem are two popular big data solutions.

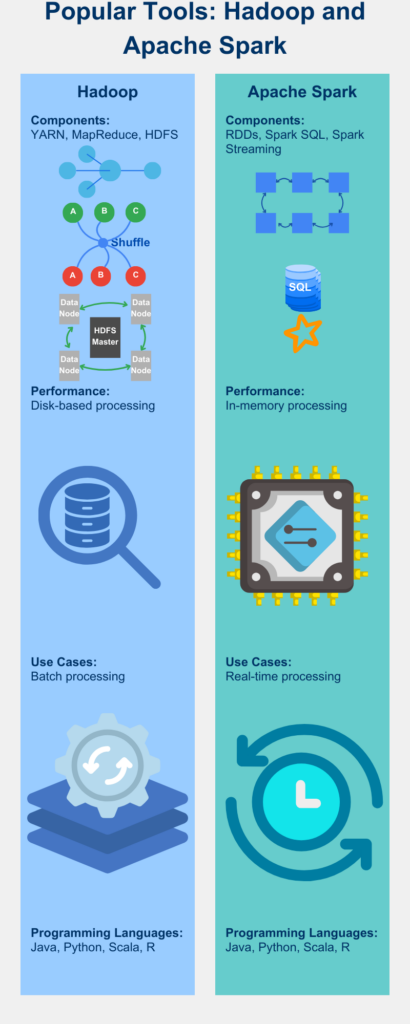

Hadoop Ecosystem

The core of the Hadoop ecosystem comprises Yet Another Resource Negotiator (YARN), MapReduce, and HDFS. HDFS is an open-source distributed file system that runs on clusters of commodity hardware, doing away with the need for pricey hardware. Large datasets are stored across these clusters to take advantage of parallel processing. MapReduce is a software framework that enables the distributed, parallel processing of massive datasets across numerous nodes. Its two main steps are Map and Reduce, and the benefit of parallel processing comes from distributing both stages across several processing nodes. YARN is the scheduling and resource management framework that handles resource allocation and application execution on a cluster.
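The Map and Reduce steps are easiest to see in the classic word-count example. The sketch below runs in a single Python process and does not use Hadoop's API; the function names are hypothetical. It also makes explicit a grouping step between the two phases, usually called the shuffle, which the description above does not mention.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(mapped_pairs):
    """Group all values by key so each key reaches exactly one reducer."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # On a cluster, each document (split) would be mapped on a different node.
    mapped = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle(mapped)
    # Each key could likewise be reduced on a different node.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data big", "data pipeline"])
```

Because each call to `map_phase` depends only on its own document and each call to `reduce_phase` depends only on its own key, both stages can be spread across nodes without the workers needing to talk to each other.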

These components are designed to be interconnected and interoperable, and together they provide scalability, fault tolerance, cost-effectiveness, data processing capabilities, and ecosystem support. Hadoop scales horizontally: nodes can be added to the cluster to handle expanding data volumes and rising processing demands. Fault tolerance comes from data replication and job recovery, which provide operational continuity and data recovery after a failure. Commodity hardware keeps the platform cost-effective by removing the need for proprietary hardware, cutting infrastructure expenses. Through the distributed processing of massive datasets, the MapReduce framework offers batch processing for ETL (Extract, Transform, Load) and data analytics. A robust ecosystem of tools and frameworks makes Hadoop adaptable and enables the creation of custom big data solutions.

Apache Spark

Apache Spark is a framework for distributed computing that processes large datasets across a cluster of processing nodes. It distributes data and computation across the nodes, enabling parallel processing and scalability. Spark’s fundamental data structure is the Resilient Distributed Dataset (RDD), which represents a distributed collection of objects that can be operated on in parallel. RDDs are immutable and fault-tolerant: partitions lost to a failure are recovered automatically by recomputing them. Spark performs in-memory processing, which significantly reduces disk reads and writes and makes it faster than disk-based processing systems. Spark supports multiple programming languages (including Scala, Java, Python, and R), extending its accessibility, and its libraries support batch processing, real-time streaming (Spark Streaming), machine learning (MLlib), and interactive SQL queries (Spark SQL).
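The way an immutable dataset can recover lost partitions by recomputation can be sketched in a few lines of plain Python. This is a toy model, not Spark's API or implementation: the `ToyRDD` class and all of its method names are hypothetical. Each dataset remembers the parent it was derived from and the function that was applied, so any partition can be rebuilt on demand.

```python
class ToyRDD:
    """Toy model of an immutable, partitioned dataset that tracks its lineage."""

    def __init__(self, source_partitions=None, parent=None, fn=None):
        self._source = source_partitions  # only set on the root dataset
        self._parent = parent             # lineage: the dataset this one derives from
        self._fn = fn                     # per-element function applied to the parent
        self._cache = {}                  # partition index -> computed list (in memory)

    @classmethod
    def parallelize(cls, data, num_partitions):
        """Split a list into partitions by striding through it."""
        parts = [data[i::num_partitions] for i in range(num_partitions)]
        return cls(source_partitions=parts)

    @property
    def num_partitions(self):
        if self._parent is None:
            return len(self._source)
        return self._parent.num_partitions

    def map(self, fn):
        """Return a new dataset; the original is never modified."""
        return ToyRDD(parent=self, fn=fn)

    def compute_partition(self, index):
        """Return one partition, rebuilding it from lineage if it is not cached."""
        if index not in self._cache:
            if self._parent is None:
                self._cache[index] = list(self._source[index])
            else:
                parent_part = self._parent.compute_partition(index)
                self._cache[index] = [self._fn(x) for x in parent_part]
        return self._cache[index]

    def lose_partition(self, index):
        """Simulate a failure that drops one in-memory partition."""
        self._cache.pop(index, None)

    def collect(self):
        return [
            x
            for i in range(self.num_partitions)
            for x in self.compute_partition(i)
        ]


base = ToyRDD.parallelize([1, 2, 3, 4, 5, 6], num_partitions=2)
squared = base.map(lambda x: x * x)
before = squared.collect()
squared.lose_partition(1)   # pretend the node holding partition 1 failed
after = squared.collect()   # partition 1 is recomputed from the parent
```

Immutability is what makes this safe: because `base` never changes, replaying the same function over the same parent partition always reproduces the lost data.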

Comparing Hadoop and Spark

Given its faster processing speeds and out-of-the-box real-time stream processing, select Apache Spark for real-time processing over Hadoop. Apache Spark’s programming model is more flexible and user-friendly, making development and maintenance easier. Hadoop is more robust and stable when handling large-scale batch-processing tasks. Therefore, select Hadoop for ETL, data warehousing, and mission-critical batch processing.

Scalability and Reliability of Big Data Technologies

In big data processing, scalability and reliability are two critical areas of research. Since data is continually growing, existing big data processing systems must accommodate constantly increasing data volumes.

Ensuring Scalability

Given the parallel nature of big data processing systems, a common way to accommodate growing data volumes is horizontal scaling, which adds more nodes to distribute workloads. Closely associated with horizontal scaling is elasticity, which dynamically scales resources up to handle peak loads and back down to avoid paying for unused capacity. Cloud computing lets organizations introduce elasticity quickly and cost-efficiently. Vertical scaling, which adds resources to each node, also has a role in big data processing, and in some cases it is a more appropriate solution than horizontal scaling.
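Elastic horizontal scaling comes down to repeatedly answering one question: how many nodes does the current load need? The sketch below is a deliberately simple illustration. The function names, the idea of a fixed per-node capacity, and the minimum and maximum limits are assumptions for the example, not the behavior of any specific cloud autoscaler.

```python
import math


def nodes_needed(load, capacity_per_node, min_nodes=1, max_nodes=100):
    """Number of equal-sized nodes required to cover the current load.

    load and capacity_per_node share one unit (for example, requests per second).
    The result is clamped to the range [min_nodes, max_nodes].
    """
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    required = math.ceil(load / capacity_per_node)
    return max(min_nodes, min(max_nodes, required))


def scaling_plan(loads, capacity_per_node, **limits):
    """Elasticity: recompute the node count for each observed load."""
    return [nodes_needed(load, capacity_per_node, **limits) for load in loads]


# Hypothetical load readings over three intervals, 100 units per node.
plan = scaling_plan([120, 900, 300], capacity_per_node=100)
```

A fixed cluster sized for the peak would run nine nodes in every interval. The elastic plan runs nine only during the peak and fewer the rest of the time, which is where the cost saving comes from.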

Ensuring Reliability

Reliability is critical for data integrity and business continuity, impacting regulatory compliance, credibility, and reputation. Several strategies address reliability, including data replication, fault tolerance mechanisms, monitoring and alerting, and disaster recovery planning.

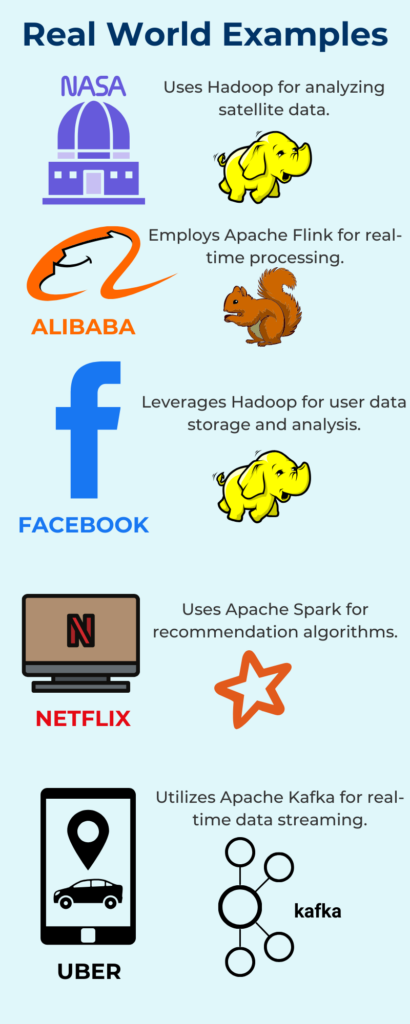

Real World Examples

Several well-known companies use big data processing, including NASA, Alibaba, Facebook, Netflix, and Uber.

Netflix and Apache Spark

In order to handle massive amounts of streaming data, Netflix makes use of Apache Spark in addition to Apache Hadoop.

Facebook and Hadoop

Facebook uses Hadoop and other technologies to manage billions of user interactions every day and support its online features for users.

Uber and Real-Time Streaming

Uber employs Apache Kafka for real-time streaming and Hadoop for batch processing, covering its needs for both real-time and batch workloads.

NASA’s Space Missions

To enable its space missions, NASA must process and analyze large volumes of data, and it leverages big data processing technology to do so.

Alibaba’s Transactional Data

Alibaba leverages big data technologies to process enormous volumes of transactional data, supporting both its internal operations and the services it provides to online users.

Further Reading

Designing Data-Intensive Applications

Author: Martin Kleppmann

Description: A deep dive into the principles and architecture behind modern data systems. This book covers distributed systems, databases, and data pipelines, providing essential knowledge for building reliable, scalable applications.

Big Data: Principles and best practices of scalable real-time data systems

Authors: Nathan Marz, James Warren

Description: Introduces the Lambda Architecture for building scalable, fault-tolerant big data systems, focusing on real-time and batch processing.

The Data Warehouse Toolkit: The Definitive Guide to Dimensional Modeling

Authors: Ralph Kimball, Margy Ross

Description: A comprehensive guide on data warehousing and dimensional modeling techniques, essential for effective data storage and retrieval.

Streaming Systems: The What, Where, When, and How of Large-Scale Data Processing

Authors: Tyler Akidau, Slava Chernyak, Reuven Lax

Description: A foundational resource on stream processing, covering real-time data pipelines and large-scale distributed systems.

Cloud Computing: Concepts, Technology & Architecture

Authors: Thomas Erl, Zaigham Mahmood, Ricardo Puttini

Description: Comprehensive exploration of cloud computing architecture, essential for understanding modern big data infrastructure.