Introduction

Understanding Big Data

Big data involves managing and analyzing large datasets to extract valuable insights that can be applied in various beneficial ways. These insights include patterns, trends, connections, and anomalies.

Big Data Use Cases

Typical big data use cases include understanding consumer preferences, making tailored suggestions, forecasting disease outbreaks, detecting fraud, and optimizing manufacturing. Many businesses find such use cases crucial to preserving their competitive edge.

Addressing Big Data Challenges and Opportunities

However, as with all technologies, handling big data presents significant challenges. Among the most critical are security, privacy, and data quality. Security is the need to protect against data breaches and unauthorized access. Closely related is privacy: personal data that is made public can harm individuals and carry legal repercussions. Data quality is the need to keep data accurate and unaltered so that it does not misrepresent the information it conveys.

Scope of This Article

This article investigates these challenges and considers how businesses can address them to gain the advantages that big data offers. Big data users must be aware of these challenges because of the adverse consequences they risk. Guidelines are provided so that users can address the challenges proactively and develop effective solutions.

Ethical Considerations and Collaboration

Big data raises many ethical considerations, and its users need to handle it responsibly to avoid loss of reputation. Given the size of these challenges, collaboration between stakeholders is also necessary to address them and develop innovative solutions.

Leveraging Big Data: Benefits and Solutions

Because big data has many potential benefits, it is important to explore innovative solutions to its challenges. Recent innovations in artificial intelligence and machine learning have the potential to address these challenges and provide actionable insights. Sound management practices and robust data governance frameworks are necessary to ensure data quality, security, and compliance. Technical and organizational cybersecurity measures are essential in protecting against data breaches and cyber threats. Similarly, privacy-enhancing technologies and practices are necessary to protect individual privacy and comply with regulations.

Discussion Scope

This article can only present a high-level discussion of these challenges, since each demands an in-depth study of its nature and of the solutions that address it. The discussion is limited to business contexts and does not cover the in-depth technical and academic discussions associated with these challenges. Readers are encouraged to research these challenges and their solutions further to support whatever role they have in this field.

Security Challenges and Opportunities

Data Breaches

Data breaches, in which sensitive information is exposed or manipulated, are the main concern in big data security. This information can include personal details, financial data, or intellectual property. Organizations must actively pursue strategies to prevent data breaches, monitor for them, and take remedial action whenever they occur. Prevention starts with a vulnerability assessment of data assets and of potential entry points for cyber attacks. Penetration testing complements this assessment: testers attempt to breach data protections in order to identify vulnerabilities. Remediation includes incident response planning, which details how to minimize the impact of a breach and facilitate recovery. It is also essential to encrypt protected data at rest and in transit, so that the data stays unreadable to unauthorized parties even when a breach occurs.

Holistic Data Protection

Data protection requires a holistic approach that encompasses both technical and organizational safeguards. Postmortems should be conducted after any breach to strengthen these safeguards further. User credentials alone are insufficient to prevent unauthorized access; multi-factor authentication adds an essential layer of security by requiring users to provide multiple forms of verification. Network segmentation is another essential safeguard, limiting the spread of cyber attacks and containing potential breaches.
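As an illustration of multi-factor authentication, the sketch below combines a password check with a time-based one-time password (TOTP) as the second factor. It follows the public HOTP/TOTP algorithms (RFC 4226 and RFC 6238) using only the Python standard library. The function names and the `verify_login` flow are assumptions made for this example, not a production design; a real deployment would rely on a vetted authentication library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


def verify_login(password_ok: bool, submitted_code: str, secret: bytes,
                 at_time: Optional[float] = None) -> bool:
    """Hypothetical login check: both factors must succeed."""
    expected = totp(secret, at_time)
    # compare_digest avoids leaking information through timing differences.
    return password_ok and hmac.compare_digest(expected, submitted_code)
```

A correct password with a wrong code, or a correct code with a wrong password, is rejected; only the combination grants access.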

Employee Training and Security Culture

Training is an essential organizational safeguard, allowing employees to recognize and report suspicious activities, including phishing, a common cyber attack. Alongside training, it is necessary to promote a proactive security culture where best practices are continually communicated and reinforced. This includes encouraging and enforcing secure work practices such as using virtual private networks (VPNs), encrypting communications, and securing home Wi-Fi networks in remote work settings.

IT Network Monitoring

It is essential to monitor the IT network perimeter, continuously watching endpoint devices for signs of malicious activity and responding promptly to threats. Monitoring the network itself is also essential to detect and respond to cyber threats in real time, so that mitigation is timely. Anomaly detection is an essential component of network monitoring; it identifies unusual patterns or behaviors in network traffic that may indicate security breaches or malicious activity. Monitoring software vulnerabilities is essential to applying security patches promptly and reducing the risk that these vulnerabilities are exploited. Security Information and Event Management (SIEM) tools complement network monitoring by analyzing security logs and events to identify potential security incidents and vulnerabilities.
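To make the idea of anomaly detection concrete, the following is a minimal sketch that flags traffic measurements lying far from a baseline, using a simple z-score. The threshold of 3 standard deviations and the notion of "requests per minute" are assumptions chosen for illustration; production monitoring tools typically use more sophisticated models.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value) pairs whose z-score against the baseline
    exceeds the threshold.

    baseline: measurements from a period assumed to be normal.
    observations: new measurements to check.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [(i, x) for i, x in enumerate(observations) if x != mu]
    return [
        (i, x)
        for i, x in enumerate(observations)
        if abs(x - mu) / sigma > threshold
    ]


# Hypothetical requests-per-minute figures.
normal_traffic = [100, 102, 98, 101, 99, 103, 97, 100]
new_traffic = [101, 99, 250, 104]
suspicious = find_anomalies(normal_traffic, new_traffic)
```

In this example only the reading of 250 is flagged; whether it is an attack or a legitimate spike still requires human or SIEM-assisted investigation.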

Collaborative Security Efforts

Collaboration with the wider IT community is required since no individual organization has the resources to respond to a rapidly changing field of data security on its own.

Privacy Concerns and Strategies

Handling Personal Information

Large datasets can contain the personal information of many people, and misuse of that information may violate their rights. Organizations must handle and use this data responsibly and implement data practices that reduce this risk. Protecting personal data begins at the data-gathering stage: organizations must seek individuals’ express consent and inform them of the purpose and extent of data usage. In several well-publicized instances, organizations have used personal data without the owners’ knowledge or consent. Businesses need to guard against improper uses of personal information, which include intrusive surveillance, targeted advertising, and profiling. Unauthorized access to that data puts people at risk of negative outcomes such as identity theft, financial fraud, and reputational harm. Organizations that practice good stewardship of personal data will earn the respect of consumers.

Legal and Regulatory Frameworks

Many jurisdictions have responded to data privacy issues by placing legal and regulatory requirements on organizations that handle personal data. Failure to comply exposes organizations to fines, legal liabilities, and reputational damage. Two prominent examples of data privacy regulatory frameworks are the California Consumer Privacy Act (CCPA) and the European Union General Data Protection Regulation (GDPR). Many organizations operate in at least one of these jurisdictions, which makes them subject to these frameworks and to the consequences of non-compliance. Other jurisdictions are introducing regulatory frameworks with global reach that impose serious consequences on organizations in breach of them, including hefty fines and operational restrictions.

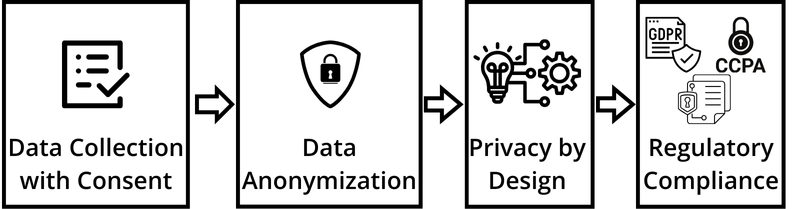

Technical Solutions for Privacy

Organizations have a suite of technical, process, and procedural solutions to address data privacy issues. In addition to the security solutions discussed earlier, technical solutions include data anonymization techniques, which make it difficult to trace personal data back to individuals’ identities. A deeper approach is privacy by design, where privacy requirements are considered alongside other functional and non-functional requirements in the design and architecture of data processing systems. This should commence with a Privacy Impact Assessment (PIA), which assesses the potential impact of data processing activities on individual privacy rights so that risks can be identified and mitigated during the development lifecycle. A key component of privacy by design is data minimization, where only the personal data needed for specific, explicit, and legitimate purposes is collected.
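The sketch below illustrates two of these ideas together: data minimization (keeping only fields on an allow-list) and replacing a direct identifier with a keyed hash. Note that keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can recompute the token and the remaining fields may still allow re-identification. The field names, the `ALLOWED_FIELDS` set, and the function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields needed for the stated purpose.
ALLOWED_FIELDS = {"age_band", "region", "purchase_total"}


def pseudonymize_id(user_id: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC).

    The same user_id and key always yield the same token, so records can
    still be linked, but the token cannot be reversed without the key.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes) -> dict:
    """Drop every field not on the allow-list and tokenize the user id."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["subject_token"] = pseudonymize_id(record["user_id"], key)
    return reduced


raw = {
    "user_id": "u-1",
    "name": "Ann Example",
    "email": "ann@example.org",
    "region": "EU",
    "purchase_total": 12.5,
}
safe = minimize_record(raw, key=b"demo-key-keep-this-secret")
```

After this step, `safe` contains no name or email, only the allowed fields and an opaque token.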

Processes and Procedures for Privacy

Important components of processes and procedures include access controls on personal data, implemented through mechanisms like multi-factor authentication and user permissions, and data retention policies that specify how long data may be stored.
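A data retention policy can be expressed as a simple rule: each category of data has a maximum storage duration, and records older than that are purged. The following is a minimal sketch of that rule; the categories and durations in `RETENTION_PERIODS` are invented for illustration and would in practice come from the organization's policy and the regulations that apply to it.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category.
RETENTION_PERIODS = {
    "web_logs": timedelta(days=90),
    "support_tickets": timedelta(days=365),
}


def is_expired(category, created_at, now):
    """True when a record has been stored longer than its category allows."""
    return now - created_at > RETENTION_PERIODS[category]


def split_by_retention(records, now):
    """Return (keep, purge) lists according to the retention policy."""
    keep, purge = [], []
    for record in records:
        expired = is_expired(record["category"], record["created_at"], now)
        (purge if expired else keep).append(record)
    return keep, purge
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the rule easy to test and audit.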

Data Quality Issues and Solutions

Importance of Data Quality

Big data processing aims to extract meaningful information that organizations can use to improve the outcome of their activities. However, this depends heavily on the integrity of the collected data, which must be accurate, consistent, and reliable. Inaccurate, incomplete, and duplicate data will invariably lead to flawed analysis and erroneous decisions or actions, which in many scenarios can have catastrophic consequences, including financial loss and loss of life. One example is faulty product design based on bad data, which leads to safety risks. Accurate data is also essential for organizations to build trust and credibility with stakeholders. Therefore, organizations must draft and implement strategies for error identification and remediation.

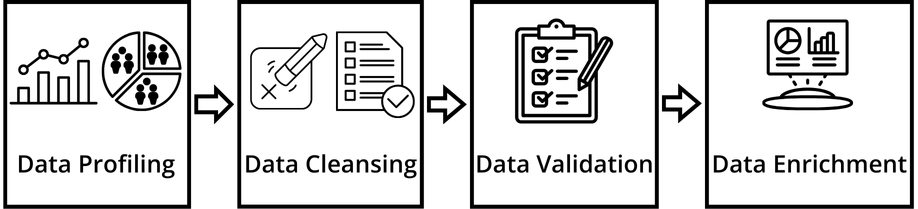

Error Identification and Remediation

Strategies that identify data inaccuracies and inconsistencies include data profiling, outlier detection, and trend analysis. Remediation strategies for inaccurate and inconsistent data include data cleansing, enrichment, deduplication (identification and removal of duplicate records from datasets), and validation processes that improve the quality and reliability of data.

Data cleansing techniques, such as validation rules (including format, range, and cross-field validation for data accuracy and completeness), standardization (ensuring consistency across datasets), and error correction (outlier detection, removal, and imputation methods like mean substitution or regression), are often prerequisite steps for these remediation strategies.
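The validation, standardization, and deduplication steps described above can be sketched as small functions. The record layout (`email`, `age`, `start`, `end`), the accepted age range, and the simplified email pattern are assumptions made for this example; real rules depend on the dataset.

```python
import re
from datetime import date

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record):
    """Apply format, range, and cross-field rules; return a list of errors."""
    errors = []
    if not EMAIL_RE.match(record.get("email") or ""):
        errors.append("email: invalid format")          # format rule
    age = record.get("age")
    if age is None or not 0 <= age <= 120:
        errors.append("age: missing or out of range")   # range rule
    start, end = record.get("start"), record.get("end")
    if start and end and end < start:
        errors.append("end: earlier than start")        # cross-field rule
    return errors


def standardize(record):
    """Normalize the email so equivalent values compare equal."""
    cleaned = dict(record)
    cleaned["email"] = (record.get("email") or "").strip().lower()
    return cleaned


def deduplicate(records, key="email"):
    """Keep the first record seen for each key value."""
    seen, unique = set(), []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique
```

Standardizing before deduplicating matters: `" Ann@Example.org "` and `"ann@example.org"` are only recognized as the same record once both have been normalized.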

Automation of Data Quality Techniques

The sheer size of big datasets necessitates automating these strategies and techniques. Data cleansing algorithms such as fuzzy matching and pattern recognition can automate data cleaning and standardization tasks, significantly improving efficiency. Data enrichment techniques, which include data augmentation and integration with external sources, can enhance the completeness and accuracy of datasets. However, any data quality solution must keep humans involved: automated data quality tools should monitor data and alert human operators to quality issues in real time. This enables users to perform proactive remediation and prevent downstream impacts.
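As a small illustration of fuzzy matching, the sketch below maps free-text values onto a list of canonical names using the similarity ratio from Python's standard `difflib` module. The canonical list, the function names, and the cutoff of 0.8 are assumptions for this example; values below the cutoff return `None` so that a human can review them, in line with the need to keep people involved.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Similarity ratio between 0 and 1, ignoring case and outer spaces."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def match_to_canonical(value, canonical, cutoff=0.8):
    """Return the closest canonical name, or None if nothing is close enough."""
    best = max(canonical, key=lambda name: similarity(value, name))
    return best if similarity(value, best) >= cutoff else None


# Hypothetical canonical company names.
KNOWN_COMPANIES = ["Acme Corp.", "Initech LLC"]

cleaned = match_to_canonical("ACME corp", KNOWN_COMPANIES)
unmatched = match_to_canonical("Globex", KNOWN_COMPANIES)
```

Here the misspelled `"ACME corp"` resolves to `"Acme Corp."`, while `"Globex"` has no sufficiently close match and is left for manual review.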

Role of Organizational Policies

Organizational policies and processes also play an essential role in data quality assurance, and adopting a data stewardship culture is necessary. Organizational policies must define roles, responsibilities, and procedures for managing data quality throughout its lifecycle. Effective data quality assurance also requires measurements, which include accuracy, completeness, consistency, timeliness, and reliability. These policies must implement the abovementioned strategies to make data quality assurance effective, and must include continuous improvement mechanisms for refining the policies and procedures over time.
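Some of these measurements can be computed directly from the data. The following sketch shows one possible way to score completeness (the share of required fields that are filled in) and uniqueness of a key field as a rough proxy for duplicate records. Both definitions, and the field names used, are assumptions for illustration; organizations usually define their own metrics in policy.

```python
def completeness(records, required_fields):
    """Fraction of required field slots that hold a non-empty value."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for record in records
        for field in required_fields
        if record.get(field) not in (None, "")
    )
    return filled / total


def uniqueness(records, key):
    """Fraction of non-empty key values that are distinct."""
    values = [r.get(key) for r in records if r.get(key) not in (None, "")]
    return len(set(values)) / len(values) if values else 1.0


# Hypothetical customer records.
customers = [
    {"id": 1, "email": "a@example.org"},
    {"id": 1, "email": ""},
    {"id": 2, "email": None},
    {"id": 3, "email": "c@example.org"},
]
completeness_score = completeness(customers, ["id", "email"])
uniqueness_score = uniqueness(customers, "id")
```

Tracking such scores over time gives the continuous improvement process something concrete to measure against.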

Leveraging Big Data Responsibly

Responsibility and Consequences

Organizations that use big data assume responsibility for handling it properly, since mishandling can have severe consequences. In particular, they are responsible for safeguarding data security, privacy, and quality.

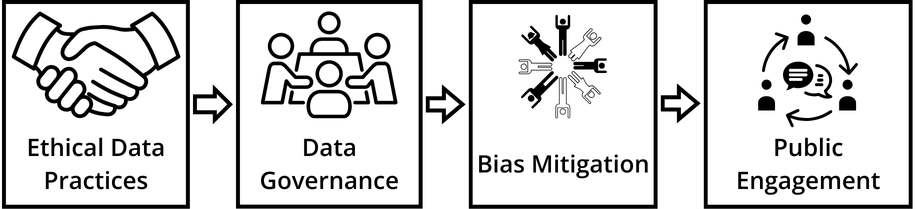

Ethical Practices and Ecosystem

Organizations must adopt ethical practices in data handling to preserve trust and guard their reputation. These organizations operate in an ecosystem alongside other entities, including customers, partners, and the public, and unethical data handling will damage their relationships with these entities. Organizations with one or more brands as key assets must be especially mindful of the negative consequences that data mishandling may have on their brands. A growing body of legal and regulatory requirements also imposes severe consequences for data mishandling. Operating in an ecosystem implies social responsibility, since it is in all members’ interest to maintain that ecosystem’s health.

Bias and Diversity

Bias and diversity are a growing topic of discussion in many big data use cases, where certain groups can be disadvantaged by biased handling or by bias implicit in the data. Therefore, organizations must implement bias mitigation processes and practices and be transparent about them.
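One simple way to check for bias is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio between the lowest and highest rate. This is only one of many possible fairness measures, and the group labels and data are invented for the example; a low ratio is a prompt for investigation, not proof of unfair treatment.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Share of favorable outcomes per group.

    outcomes: iterable of (group, favorable) pairs, favorable being a bool.
    """
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for group, favorable in outcomes:
        totals[group] += 1
        favorable_counts[group] += int(favorable)
    return {group: favorable_counts[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical loan decisions: group A approved 8 of 10, group B 4 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(decisions)
ratio = impact_ratio(rates)
```

In this example group B is approved half as often as group A, which would warrant a closer look at the data and the decision process.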

Data Governance and Risk Management

Organizations must support policies, practices, and committees centered around data governance to ensure proper data handling. Data governance committees are responsible for developing data handling policies and practices and should comprise representatives from all the organization’s functions. These committees are empowered to make the necessary decisions for resolving data-related issues and report regularly to senior management and stakeholders to ensure accountability and alignment with organizational objectives.

Data governance policies must specify risk management, including assessing and mitigating the risks associated with data handling. These risks include security vulnerabilities, privacy violations, poor data quality, and societal impacts. An important activity in implementing these policies is impact assessment, which identifies the risks associated with an organization’s data and its handling. Policies must also define mitigation strategies for these risks to reduce their negative impacts. Another core component is the continuous education and training of the organization’s members in sound data handling, which fosters an environment and culture of proper data handling.

Continuous Improvement and Public Engagement

Continuous improvement is also essential, so that policies are updated when inadequacies are uncovered and adapted to a changing environment. Finally, public engagement must be sought, given the impact of data handling on stakeholders and the organization’s need to maintain a positive relationship with them.

Glossary

Penetration Testing

Simulating a cyber attack to identify weaknesses in a system’s defenses. Imagine trying to pick the lock on your house to see how easy it is for a burglar to break in.

Multi-Factor Authentication (MFA)

Requiring multiple forms of verification to access a system, like a password and a fingerprint scan. It’s like needing both a key and a security code to unlock your phone.

Anomaly Detection

Finding unusual patterns in data that could indicate a problem. Imagine noticing a sudden spike in your electricity bill, which might be a sign of a malfunctioning appliance.

SIEM (Security Information and Event Management)

Tools that collect and analyze security information from various sources to identify potential security incidents. Think of it as a central security dashboard that monitors all your security cameras and alarms.

Data Anonymization

Removing personally identifiable information (PII) from data sets. This is like taking a picture of a crowd and blurring out everyone’s faces.

Privacy by Design (PbD)

Building privacy considerations into data processing systems from the start. Imagine designing a house with strong locks and security cameras from the beginning, instead of adding them later.

Data Profiling

Examining data to summarize its structure, content, and quality, including its patterns and trends. This is like looking at your bank statements to see where you spend most of your money.

Outlier Detection

Finding data points that fall outside the expected range for a set. Imagine noticing a temperature reading of 120 degrees Fahrenheit in December, which would likely be an outlier.

Data Cleansing

Identifying and correcting errors in data sets. This is like cleaning up a messy room to make it easier to find things.

- Validation Rules: Defined criteria used to check data accuracy and completeness. Imagine having a checklist to ensure all the information on a form is filled out correctly.

- Standardization: Ensuring consistency in the format of data across different sets. This is like making sure everyone in your office uses the same format for writing dates (e.g., MM/DD/YYYY).

- Deduplication: Identifying and removing duplicate records from a data set. Imagine cleaning out your closet and getting rid of clothes you have multiples of.

Data Governance

The set of policies, processes, and procedures for managing an organization’s data. Think of it as the rules and guidelines for how data is handled within a company.

Data Stewardship

The responsibility of individuals or teams to ensure the quality, security, and privacy of data. This is like assigning someone to be in charge of keeping the company kitchen clean and organized.