1. Introduction

Amazon SageMaker is a fully managed AWS service that machine learning engineers use to build, train, and deploy ML models. Advanced SageMaker deployment techniques go beyond basic hosting so that deployed models serve predictions efficiently. SageMaker also integrates with other AWS services and leverages them for efficiency and cost-effectiveness.

Deploying an ML model is as critical as training it, since real-world operations depend on the timeliness and cost of its predictions. The non-functional requirements of primary importance are scalability, performance, and cost efficiency. Advanced SageMaker deployment techniques are aimed at meeting these requirements.

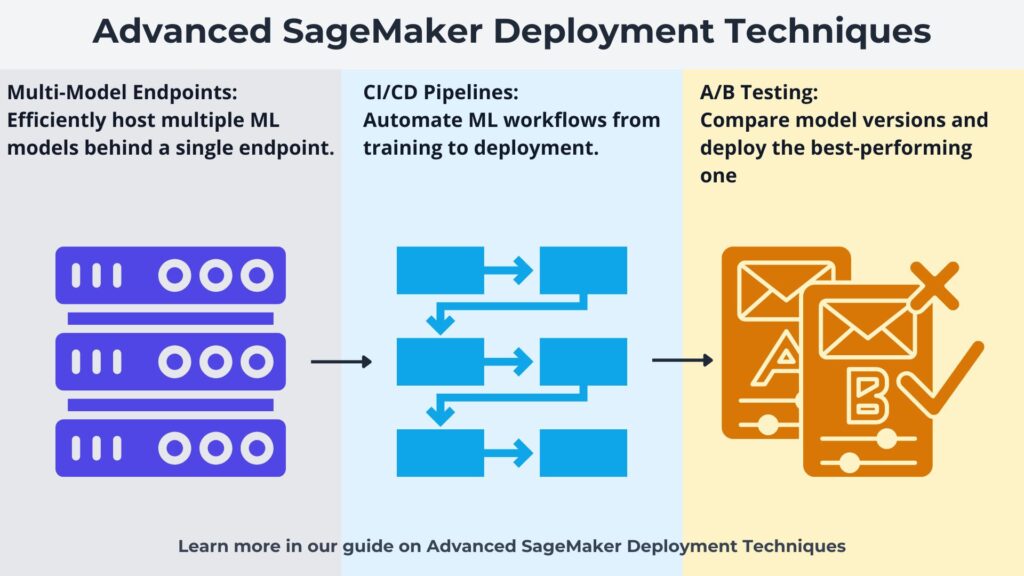

This article covers three core advanced SageMaker deployment techniques. These include Multi-Model Endpoints, SageMaker Pipelines for CI/CD, and A/B Testing for Model Optimization. Multi-Model Endpoints help with cost saving and reducing model complexity. SageMaker Pipelines for CI/CD allow rapid model updates and testing. A/B Testing for Model Optimization reduces risk when introducing a new model.

2. Advanced SageMaker Deployment Techniques

2.1 Multi-Model Endpoints for Cost Efficiency in Advanced SageMaker Deployment Techniques

One advanced SageMaker deployment technique is to deploy multiple models behind a single SageMaker endpoint. This works best when many models each receive infrequent traffic. Examples include personalized recommendation systems, customer segmentation, healthcare diagnostics across specialties, and financial risk assessment. Other examples include predictive maintenance for diverse equipment and sentiment analysis across industries. In each case there are many models that are accessed infrequently and share a similar structure but are trained on different domains. Ideally, the models are also small to medium in size so that they load quickly from storage into memory.

Multi-model endpoints dynamically load models into memory when needed. All trained models are stored in an Amazon S3 bucket, so a model consumes no endpoint memory while SageMaker is not using it. When a user makes a prediction request for a specific model, SageMaker loads that model into memory. Because a single SageMaker endpoint serves multiple models, there is no need for a dedicated endpoint per model, which reduces the endpoint infrastructure.
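As a minimal sketch of how such a model might be registered, the snippet below builds a container definition whose `ModelDataUrl` points at an S3 prefix rather than a single artifact, with `Mode` set to `MultiModel`. The helper names, image URI, bucket, and role are illustrative assumptions; `sm_client` is expected to be a `boto3.client("sagemaker")` instance.

```python
def multi_model_container(image_uri: str, model_prefix_s3: str) -> dict:
    """Build a container definition for a multi-model SageMaker model."""
    if not model_prefix_s3.startswith("s3://"):
        raise ValueError("model_prefix_s3 must be an S3 URI")
    # ModelDataUrl is a prefix that holds many model archives, not one file.
    if not model_prefix_s3.endswith("/"):
        model_prefix_s3 += "/"
    return {
        "Image": image_uri,
        "ModelDataUrl": model_prefix_s3,
        "Mode": "MultiModel",
    }


def create_multi_model(sm_client, name, role_arn, image_uri, model_prefix_s3):
    """Register the model; sm_client is assumed to be boto3.client('sagemaker')."""
    return sm_client.create_model(
        ModelName=name,
        ExecutionRoleArn=role_arn,
        PrimaryContainer=multi_model_container(image_uri, model_prefix_s3),
    )
```

New model archives can then be added by copying them under the same S3 prefix, without changing the endpoint.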

SageMaker keeps frequently accessed models cached in memory. With no load time to pay, subsequent predictions against a cached model are faster. In this way, SageMaker allocates memory and compute resources dynamically based on real-time demand. Engineers can also configure the endpoint to auto-scale based on traffic patterns and model usage frequency.

Users specify the model to use in each inference request to the endpoint, through the `TargetModel` parameter of the `InvokeEndpoint` call, which names the model artifact relative to the S3 prefix. The endpoint, therefore, knows which model to load and run.
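A hedged sketch of such a request follows. With boto3, the target model is named through the `TargetModel` parameter of `invoke_endpoint`. The payload shape (`{"instances": [...]}`), the endpoint name, and the helper names are assumptions for illustration; the actual body format depends on the serving container. `runtime_client` is expected to be a `boto3.client("sagemaker-runtime")` instance.

```python
import json


def build_invoke_request(endpoint_name: str, target_model: str, features: list) -> dict:
    """Assemble keyword arguments for invoke_endpoint on a multi-model endpoint."""
    return {
        "EndpointName": endpoint_name,
        # Archive name relative to the S3 prefix configured on the model.
        "TargetModel": target_model,
        "ContentType": "application/json",
        # Assumed payload shape; adjust to what the container expects.
        "Body": json.dumps({"instances": [features]}),
    }


def predict(runtime_client, endpoint_name, target_model, features):
    """Send the request and decode a JSON response body."""
    response = runtime_client.invoke_endpoint(
        **build_invoke_request(endpoint_name, target_model, features)
    )
    return json.loads(response["Body"].read())
```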

SageMaker and the endpoints integrate with AWS Lambda, allowing Lambda functions to dynamically orchestrate model selection based on business logic.
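One way this orchestration might look is sketched below: a Lambda handler picks a model from a field in the incoming event and forwards the request to the multi-model endpoint. The `segment` field, the model file names, and the `make_handler` factory are hypothetical; `runtime_client` is assumed to be a `boto3.client("sagemaker-runtime")` instance.

```python
import json

# Hypothetical business rule: one model archive per customer segment.
MODEL_BY_SEGMENT = {
    "retail": "retail.tar.gz",
    "enterprise": "enterprise.tar.gz",
}
DEFAULT_MODEL = "general.tar.gz"


def select_model(event: dict) -> str:
    """Choose the model archive for this request, falling back to a default."""
    return MODEL_BY_SEGMENT.get(event.get("segment"), DEFAULT_MODEL)


def make_handler(runtime_client, endpoint_name: str):
    """Return a Lambda-style handler bound to a runtime client and endpoint."""

    def handler(event, context):
        response = runtime_client.invoke_endpoint(
            EndpointName=endpoint_name,
            TargetModel=select_model(event),
            ContentType="application/json",
            Body=json.dumps(event.get("payload", {})),
        )
        return {"statusCode": 200, "body": response["Body"].read().decode("utf-8")}

    return handler
```

Keeping `select_model` separate from the AWS call makes the business rule easy to unit test.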

Whenever ML engineers apply this technique, they realize significant cost savings and reduced management complexity.

2.2 SageMaker Pipelines for CI/CD in ML Workflows

Another advanced SageMaker deployment technique is to automate the end-to-end ML workflow of building, training, and deploying models. SageMaker Pipelines supports continuous integration and continuous delivery (CI/CD), which automates these workflows robustly. The key benefit is that ML engineers can perform rapid model updates and testing.

Another advantage of SageMaker is its integration with other AWS services, which lets it perform CI/CD through the Pipelines component. It integrates with source code version management tools like GitHub and AWS CodeCommit, allowing for model versioning. To automate training jobs, it integrates with AWS CodeBuild, which enables automated building and training of models. For deployment, it uses SageMaker Pipelines, which streamlines model deployment.

2.2.1 Source Code Triggering

A simple scenario illustrates how CI/CD works for ML models. GitHub or AWS CodeCommit manages the source code that specifies the model. When an ML developer commits code to the repository, the commit automatically triggers pipeline execution.

2.2.2 Model Building and Training using AWS CodeBuild

AWS CodePipeline connects the source control trigger to AWS CodeBuild, which builds and trains the model. CodeBuild first fetches the ML training scripts from source control along with any associated data stored in S3. Next, it compiles the source code, prepares the environment for training, and sets up the dependencies for the ML model. For a comprehensive understanding of building and training models, refer to our Beginner’s Guide on TensorFlow Models.

SageMaker training jobs are often containerized using tools like Docker. Upon compilation, AWS CodeBuild builds the Docker images for ML model training and pushes them to a container registry, typically the Amazon Elastic Container Registry (ECR). SageMaker can then load these images to run training jobs with optimized dependencies.

Once AWS CodeBuild has completed building the code and images, it triggers a SageMaker training job using the API. Upon training completion, the training job writes the model artifact to the S3 location specified in its output configuration.
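The API call in this step might look like the sketch below, which a build script could run. It assembles a `create_training_job` request in which `OutputDataConfig` names the S3 location for the resulting model artifact. The instance type, volume size, runtime limit, channel name, and helper names are illustrative assumptions; `sm_client` is expected to be a `boto3.client("sagemaker")` instance.

```python
def training_job_request(job_name, image_uri, role_arn, train_s3_uri, output_s3_uri):
    """Assemble keyword arguments for the SageMaker create_training_job call."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,  # image pushed to ECR by the build
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3_uri,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
            }
        ],
        # SageMaker writes the trained model artifact under this path.
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",  # assumed instance type
            "InstanceCount": 1,
            "VolumeSizeInGB": 30,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


def start_training(sm_client, **kwargs):
    """Submit the job; sm_client is assumed to be boto3.client('sagemaker')."""
    return sm_client.create_training_job(**training_job_request(**kwargs))
```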

Next, AWS CodeBuild runs unit tests for the data preprocessing scripts and checks the model evaluation metrics. It uses a buildspec.yml file that defines the build commands, including those that run the test cases that validate the training scripts before deployment.

Once testing is complete, AWS CodeBuild triggers the SageMaker model deployment process.
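The tests run at this stage could resemble the following sketch. The preprocessing function (`min_max_scale`), the quality gate, and the 0.9 accuracy threshold are all hypothetical stand-ins for whatever a real project defines; the `test_*` functions follow pytest naming so a build command could collect them.

```python
def min_max_scale(values):
    """Scale numbers to the range [0, 1]; constant input maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def passes_quality_gate(metrics, min_accuracy=0.9):
    """Return True when the evaluation metrics meet the assumed threshold."""
    return metrics.get("accuracy", 0.0) >= min_accuracy


def test_min_max_scale_bounds():
    scaled = min_max_scale([2, 4, 6])
    assert min(scaled) == 0.0 and max(scaled) == 1.0


def test_quality_gate_blocks_weak_model():
    assert not passes_quality_gate({"accuracy": 0.72})
```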

2.2.3 Model Deployment using SageMaker Pipelines

Upon triggering by AWS CodeBuild, SageMaker Pipelines deploy the model stored in S3 to a SageMaker endpoint. There, the endpoint executes the model upon receiving inference requests from clients. To further explore building pipelines, please refer to SageMaker Pipeline Workshop: Build, Automate, and Optimize ML Workflows.
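Underneath, deploying a model from S3 to an endpoint comes down to three SageMaker API calls: `create_model`, `create_endpoint_config`, and `create_endpoint`. The sketch below issues them directly with boto3 rather than through a pipeline definition, purely to show the sequence. The naming scheme, instance type, and helper names are assumptions; `sm_client` is expected to be a `boto3.client("sagemaker")` instance.

```python
def deployment_requests(name, image_uri, model_data_url, role_arn,
                        instance_type="ml.m5.large"):
    """Return the ordered (operation, kwargs) pairs needed to deploy a model."""
    config_name = f"{name}-config"
    return [
        ("create_model", {
            "ModelName": name,
            "ExecutionRoleArn": role_arn,
            "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
        }),
        ("create_endpoint_config", {
            "EndpointConfigName": config_name,
            "ProductionVariants": [{
                "VariantName": "AllTraffic",
                "ModelName": name,
                "InitialInstanceCount": 1,
                "InstanceType": instance_type,
                "InitialVariantWeight": 1.0,
            }],
        }),
        ("create_endpoint", {
            "EndpointName": f"{name}-endpoint",
            "EndpointConfigName": config_name,
        }),
    ]


def deploy(sm_client, requests):
    """Issue each request in order against a SageMaker client."""
    for operation, kwargs in requests:
        getattr(sm_client, operation)(**kwargs)
```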

2.2.4 Best Practices of Advanced SageMaker Deployment Techniques

SageMaker’s integration with other AWS services also helps it follow best practices. It can use Amazon CloudWatch to monitor pipeline execution. It can also use AWS CodePipeline and SageMaker Pipelines to roll back any failed deployments.

2.3 A/B Testing for Model Performance Optimization

No amount of testing can guarantee how new code or a new ML model will perform in the real world. There are many reasons for this, the most common being the unpredictability of user interactions with these models. Another common reason is the difficulty of predicting whether users will like the change. The most infamous example of this was New Coke.

A/B testing is one of the core advanced SageMaker deployment techniques that addresses this uncertainty. It makes it possible to roll back to the earlier model whenever the new model fails to meet expectations in some way. This can be due to lower accuracy, degraded performance, or failing to meet user expectations.

A/B testing works by having both the current and new models deployed simultaneously. This allows engineers to safely evaluate the new model’s performance under real-world conditions. If the new model performs worse than the current model, traffic reverts to the current model. This reduces the risk associated with new models.
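The revert decision can be expressed as a simple rule over measured metrics. The sketch below is one hypothetical rule: keep the new model only if its accuracy is at least as good and its latency has not regressed by more than a tolerance. The metric names and the 10 percent tolerance are assumptions, not values prescribed by SageMaker.

```python
def keep_new_model(current: dict, candidate: dict,
                   max_latency_regression: float = 0.10) -> bool:
    """Return True when the candidate should replace the current model."""
    accuracy_ok = candidate["accuracy"] >= current["accuracy"]
    # Allow latency to grow by at most the given fraction of the baseline.
    latency_limit = current["latency_ms"] * (1 + max_latency_regression)
    latency_ok = candidate["latency_ms"] <= latency_limit
    return accuracy_ok and latency_ok
```

In practice the inputs would come from metrics collected for each model version over the same time window.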

In SageMaker, A/B testing is typically implemented with production variants: an endpoint hosts several variants, and SageMaker routes incoming traffic across them. Each variant runs a different version of the model; one is the current model, and the other is the new model under evaluation. To evaluate the new model, engineers measure key performance indicators (KPIs), including accuracy, latency, and user engagement. This supports data-driven decision-making when choosing the best-performing model, since the comparison is based on real-time data.

The SageMaker endpoint configuration controls the traffic distribution by assigning a weight to each variant. Results are analyzed using Amazon CloudWatch metrics, another example of SageMaker’s integration with other AWS services.
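A minimal sketch of the traffic split follows, assuming both model versions are hosted as production variants in one endpoint configuration. Variant weights set the share of traffic, and `update_endpoint_weights_and_capacities` adjusts them later without redeploying. The variant names, instance type, and helper names are illustrative; `sm_client` is expected to be a `boto3.client("sagemaker")` instance.

```python
def ab_variants(current_model: str, new_model: str, new_share: float = 0.25,
                instance_type: str = "ml.m5.large") -> list:
    """Build two production variants that split traffic by weight."""
    if not 0.0 < new_share < 1.0:
        raise ValueError("new_share must be between 0 and 1")

    def variant(name, model, weight):
        return {
            "VariantName": name,
            "ModelName": model,
            "InitialInstanceCount": 1,
            "InstanceType": instance_type,
            "InitialVariantWeight": weight,
        }

    return [
        variant("current", current_model, 1.0 - new_share),
        variant("candidate", new_model, new_share),
    ]


def shift_traffic(sm_client, endpoint_name: str, new_share: float):
    """Change the split on a live endpoint; new_share=0.0 reverts fully."""
    return sm_client.update_endpoint_weights_and_capacities(
        EndpointName=endpoint_name,
        DesiredWeightsAndCapacities=[
            {"VariantName": "current", "DesiredWeight": 1.0 - new_share},
            {"VariantName": "candidate", "DesiredWeight": new_share},
        ],
    )
```

The list returned by `ab_variants` would be passed as `ProductionVariants` when creating the endpoint configuration.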

A/B testing allows for gradual rollouts and controlled experiments. This helps to ensure critical applications are not compromised by poor model performance.

3. Scaling & Cost Optimization: A Quick Overview

See our Ultimate Guide on Apache Spark for Big Data Analytics to explore large-scale data processing techniques that enhance model performance. Advanced SageMaker deployment techniques include leveraging the AWS cloud computing environment for autoscaling and cost optimization. SageMaker endpoints integrate with AWS Auto Scaling to scale the underlying instances based on demand and traffic patterns. Engineers configure the minimum number of instances required and the maximum number allowed. They also specify the scaling strategy and the load thresholds, such as CPU utilization, that trigger scale-out and scale-in.
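As a sketch of this configuration, the snippet below prepares the two Application Auto Scaling requests for an endpoint variant: one registers the minimum and maximum instance counts, the other attaches a target-tracking policy. Note that it tracks the predefined `SageMakerVariantInvocationsPerInstance` metric rather than CPU load, which would need a customized metric. The cooldown values and helper names are assumptions; `autoscaling_client` is expected to be a `boto3.client("application-autoscaling")` instance.

```python
def autoscaling_requests(endpoint_name, variant_name, min_instances,
                         max_instances, target_invocations_per_instance):
    """Build the scalable-target and scaling-policy requests for a variant."""
    if not 1 <= min_instances <= max_instances:
        raise ValueError("require 1 <= min_instances <= max_instances")
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = dict(common, MinCapacity=min_instances, MaxCapacity=max_instances)
    policy = dict(
        common,
        PolicyName=f"{endpoint_name}-{variant_name}-target-tracking",
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": float(target_invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleOutCooldown": 60,   # assumed: react quickly to load
            "ScaleInCooldown": 300,   # assumed: release capacity slowly
        },
    )
    return target, policy


def apply_autoscaling(autoscaling_client, target, policy):
    """Register the target, then attach the policy."""
    autoscaling_client.register_scalable_target(**target)
    autoscaling_client.put_scaling_policy(**policy)
```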

These techniques also include using Spot Instances, which can save up to ninety percent of processing costs. Because AWS can terminate a Spot Instance at any time, engineers design computational jobs that recover quickly from interruption, for example by checkpointing. ML jobs without strict real-time requirements are good candidates for Spot Instances.
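For training jobs, SageMaker exposes this through managed spot training. The sketch below takes an existing `create_training_job` request and adds the spot-related settings: `EnableManagedSpotTraining`, a `MaxWaitTimeInSeconds` that is at least the maximum runtime, and a `CheckpointConfig` so an interrupted job can resume. The helper name and the checkpoint location are illustrative assumptions.

```python
import copy


def with_managed_spot(request: dict, max_wait_seconds: int,
                      checkpoint_s3_uri: str) -> dict:
    """Return a copy of a training request that runs on Spot capacity."""
    max_runtime = request["StoppingCondition"]["MaxRuntimeInSeconds"]
    if max_wait_seconds < max_runtime:
        raise ValueError("max_wait_seconds must be >= MaxRuntimeInSeconds")
    spot = copy.deepcopy(request)  # leave the caller's request untouched
    spot["EnableManagedSpotTraining"] = True
    # Total time allowed, including waiting for Spot capacity.
    spot["StoppingCondition"]["MaxWaitTimeInSeconds"] = max_wait_seconds
    # Checkpoints let training resume after an interruption.
    spot["CheckpointConfig"] = {"S3Uri": checkpoint_s3_uri}
    return spot
```

The training script itself still has to write and reload checkpoints for the recovery to work.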

Each core technique mentioned above can incorporate scaling and cost optimization into its operations. Multi-Model Endpoints can apply autoscaling to the instances that host their models. SageMaker Pipelines can configure SageMaker endpoints with AWS Auto Scaling and run training steps on Spot Instances, since managed spot capacity applies to training jobs. Finally, A/B testing can use AWS Auto Scaling to scale the model versions under evaluation.

4. Final Thoughts on Advanced SageMaker Deployment Techniques

This article surveyed three main advanced techniques for deploying ML models to production with Amazon SageMaker. Cost efficiency remains a top priority for ML engineers, making it essential to explore strategies for reducing expenses. When clients use a set of similar models intermittently, Multi-Model Endpoints improve cost efficiency.

CI/CD has established itself as a best practice for software development, eliminating manual effort in the software development lifecycle. ML best practices can extend this to the ML development lifecycle, also eliminating manual effort.

There is always risk associated with deploying an upgraded model, and A/B testing helps to reduce this risk. ML engineers can quickly revert to the current model whenever there are serious issues with the upgraded model.

ML engineers should actively experiment with these techniques and integrate them into their ML workflows for optimized performance. They should also explore AWS documentation to gain a deeper understanding of AWS SageMaker for ML.

Further Reading: Recommended Books on SageMaker & ML Deployment

For those looking to dive deeper into AWS SageMaker, machine learning deployment, and MLOps best practices, here are some recommended books:

Learn Amazon SageMaker – Second Edition, by Julien Simon

Machine Learning with Amazon SageMaker Cookbook, by Joshua Arvin Lat

Machine Learning in the AWS Cloud: Add Intelligence to Applications with Amazon SageMaker and Amazon Rekognition, by Abhishek Mishra

Pragmatic Machine Learning with Python: Learn How to Deploy Machine Learning Models in Production, by Avishek Nag

Keras to Kubernetes: The Journey of a Machine Learning Model to Production, by Dattaraj Rao

Explore these books to deepen your understanding of SageMaker and ML deployment.
