Introduction: The Dynamic Evolution of Big Data

Revolutionizing Decision-Making and Operational Efficiency

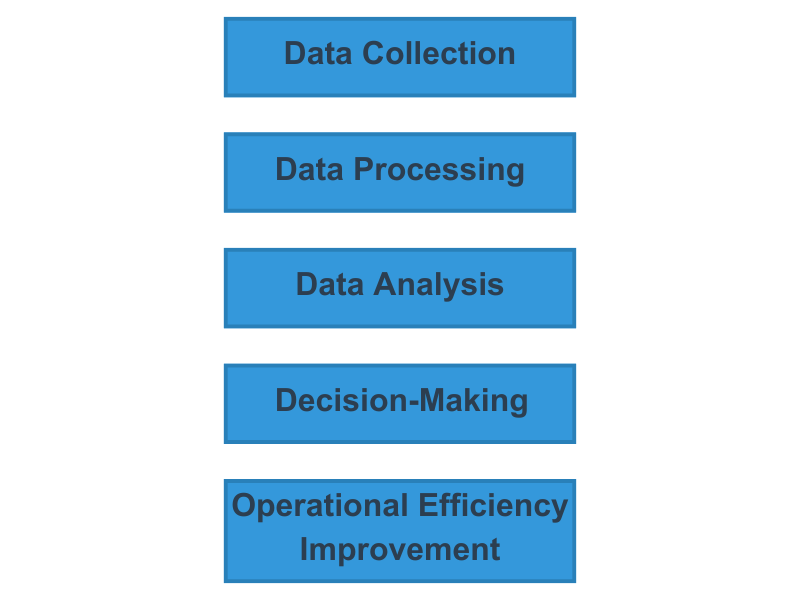

The revolutionary capability of big data processing has sparked numerous opportunities, enabling enterprises to extract insights from vast troves of data. This capability fuels evidence-based decision-making, fostering innovation, expansion, and strategic planning. It paves the way for new products and services that help companies thrive in the digital age; for example, companies can develop personalized product recommendations based on consumer behavior. Data-driven process improvements streamline procedures and identify and address inefficiencies and bottlenecks, enhancing operational productivity and efficiency.

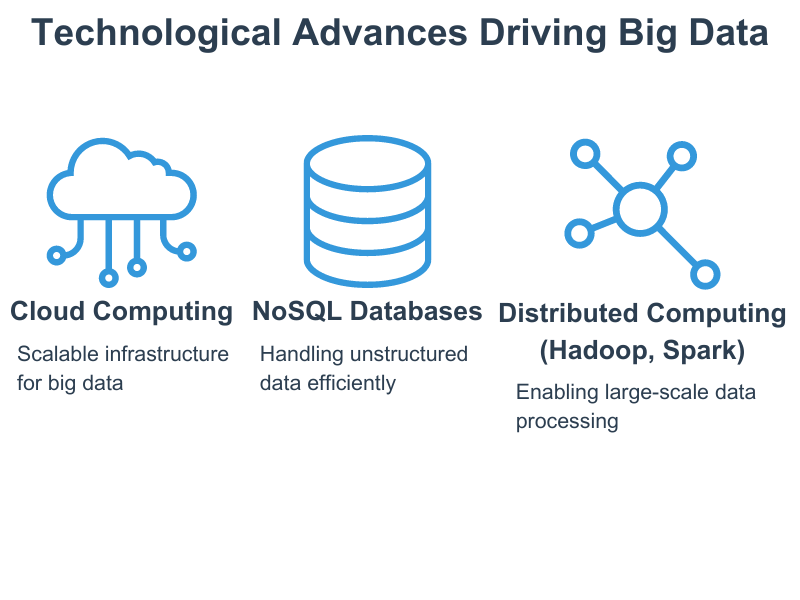

Technological Advances Driving Big Data

Digital technology’s relentless advance, especially in data storage and processing, powered big data’s growth. Progress in storage and processing hardware led to the development of distributed computing frameworks like Hadoop and Spark, which give organizations a toolbox for building data processing applications more easily. Advances in cloud technology have driven big data further, since scalable infrastructure makes big data viable for a wider range of organizations. These advances required data management solutions to support big data adoption, including NoSQL databases and data warehousing. NoSQL databases allow the handling of unstructured data, whereas data warehousing enables data analytics. Data integration and interoperability tools simplified the handling of data from heterogeneous sources, a core characteristic of big data. These tools facilitate seamless data exchange across disparate systems and enable unified views of data across the organization.

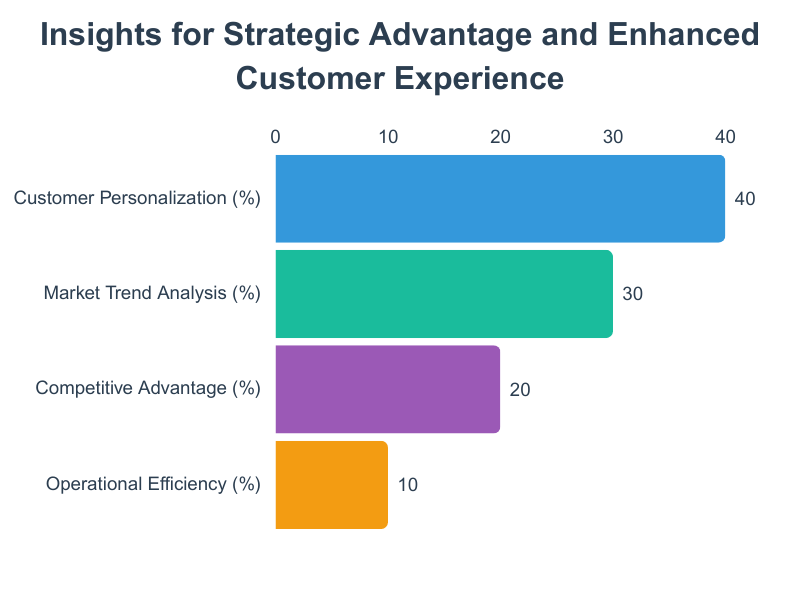

Insights for Strategic Advantage and Enhanced Customer Experience

Insights from big data play an ever-increasing role in better-informed strategic business decisions, competitive advantage, and customer experience enhancement. Grounding decisions in data improves strategic planning, resource allocation, investment strategies, and risk mitigation. Big data insights also allow a better understanding of market trends, customer preferences, and changes in consumer behavior, which helps an organization gain a competitive advantage. Finally, big data insights allow organizations to personalize their products, services, and customer interactions, and they enable better-targeted marketing that improves customer engagement and loyalty.

Future Developments and Challenges in Big Data

Since big data is a dynamic field, it will continue to change due to several important developments. These include big data’s effects on numerous industries, machine learning, edge computing, real-time analytics, and quantum computing. As big data develops, its numerous advantages and disadvantages will become apparent. Big data has already demonstrated potential for social good and sustainable development initiatives, such as climate data processing. However, ethical and privacy concerns and the risk of data breaches will continue to grow due to big data’s wider adoption. There is also an increasing body of regulation around data handling, and organizations face severe consequences for non-compliance.

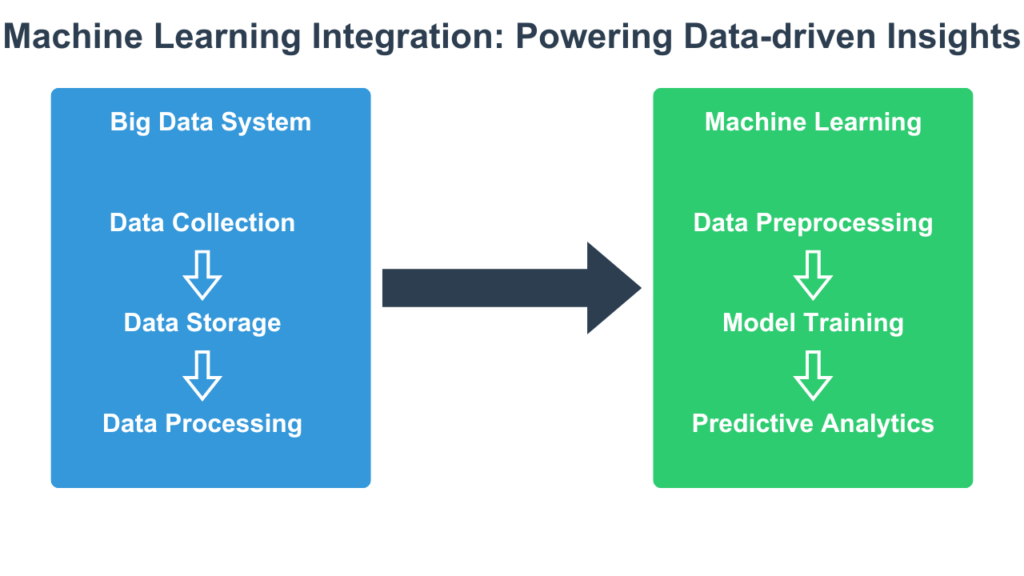

Machine Learning Integration: Powering Data-driven Insights

Symbiotic Relationship between Machine Learning and Big Data

While a distinct field, machine learning has had an almost symbiotic relationship with big data. Exponential improvement in computer hardware (faster processing and denser data storage) has advanced both fields. Big data provides ever-larger data sets for training, which have fueled machine learning progress. Machine learning in turn complements big data by deriving deep insights from those data sets, with machine learning algorithms integrating closely with big data processing systems.

Integration and Automation in Big Data Systems

Machine learning integration with big data is achieved in several ways. First, machine learning models can be embedded into existing data systems, allowing efficient processing within current infrastructures, with integration streamlined through APIs and frameworks. Second, automated machine learning tools can be deployed to simplify the training process and reduce the need for specialized data science expertise. Third, to keep machine learning models current, models can be retrained and updated on an ongoing basis with the most recent data. Finally, machine learning can be incorporated into company workflows by automating repetitive processes and decision-making points, improving operational effectiveness and efficiency.

Enhancing Data Preprocessing and Operational Efficiency

Machine learning also complements big data processing by helping human operators prepare and clean data. Automating many aspects of data preprocessing reduces manual intervention and error rates and frees human operators from the routine aspects of data preprocessing.

Enhancing Predictive Analytics with Machine Learning

Machine learning can improve existing big data processing in several ways. First, it can improve predictive analytics by refining models using new data and feedback. Benefits include anticipating market shifts and changes in customer behavior, allowing proactive instead of reactive strategies. Second, it greatly helps in uncovering complex patterns and correlations, including non-obvious connections. This is especially useful in detecting the unusual patterns of highly sophisticated fraudulent activities. Another use is gleaning insights from highly complex biological and clinical data. Machine learning also greatly enhances forecasting, thereby improving data-driven decision-making and increasing confidence in future planning and investments.

Improving Anomaly Detection for Better Security and Efficiency

Machine learning improves personalized recommendations that are becoming essential for organizations competing for market share. Additionally, it provides anomaly detection that identifies unusual patterns in data so organizations are alerted to potential issues. Cybersecurity is an important area that is increasingly dependent on this capability. Anomaly detection is also becoming necessary in system maintenance to drive improvements in operational efficiency. Data quality and integrity are increasingly dependent upon this capability, ensuring that organizations maintain high standards of performance and security.
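
As a rough illustration of the idea behind anomaly detection, the sketch below flags readings that sit unusually far from the mean of a batch. It uses a simple statistical z-score rule rather than a trained machine learning model, and the function name `find_anomalies`, the sample readings, and the threshold of 2.5 are all assumptions made for this example.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.5):
    """Return (index, value) pairs whose z-score exceeds the threshold.

    A simple statistical stand-in for anomaly detection; production
    systems typically use trained models and more robust statistics.
    """
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical sensor readings with one obvious outlier.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 55.0, 10.1, 9.7, 10.0]
anomalies = find_anomalies(readings)
print(anomalies)
```

With small batches, a single extreme value inflates the standard deviation, so the threshold has to be chosen with the batch size in mind; this is one reason real deployments favor more robust methods.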

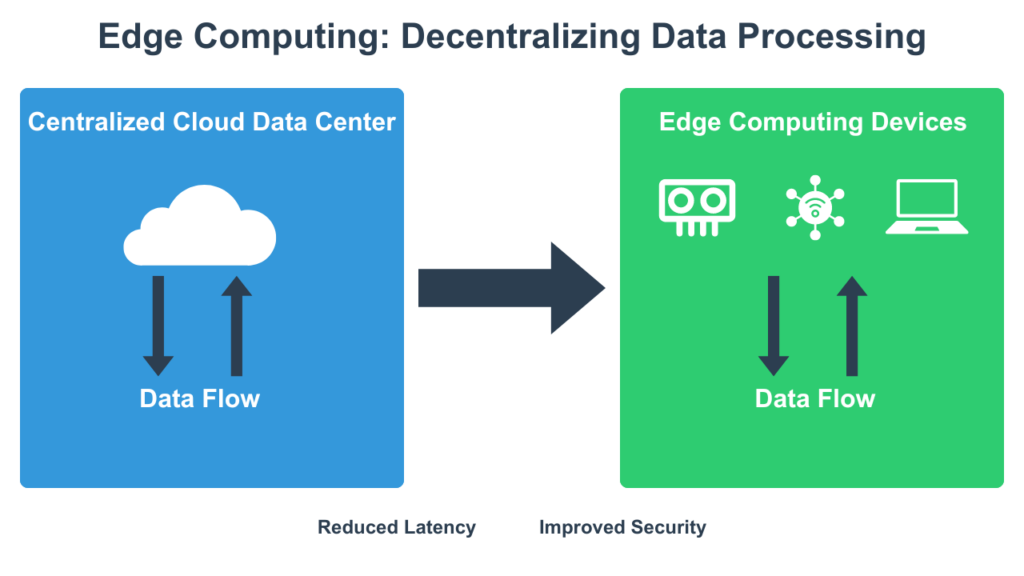

Edge Computing: Decentralizing Data Processing

The Evolution from Centralized to Edge Computing

Traditionally, centralized data centers have supplied the processing power that big data applications need. Cloud computing takes the concept of centralized data processing further, where large numbers of virtual processors can be spun up and torn down at a moment’s notice. However, with the growth of Internet of Things (IoT) devices, the disadvantages of remote centralized processing have become more apparent. These disadvantages concern latency, responsiveness, scalability, security, and resource allocation. In response to these issues, decentralized computing, better known as edge computing, started gaining traction in the 2010s and emerged as a distinct field that complements the strengths of cloud computing while addressing its disadvantages.

Addressing Latency and Cost Issues

Latency depends on the distance data needs to travel, the network devices it passes through, and the available bandwidth. Remote data processing also introduces costs for data transmission. Moving data processing to the source can significantly reduce the volume of data that needs to be transmitted to the cloud, reduce the associated latency and transmission costs, and make more efficient use of the network.
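
One way to picture this reduction in transmitted volume is an edge device that summarizes a batch of raw readings locally and sends only the summary upstream. The sketch below is illustrative only: the `summarize_batch` function, the sensor name, and the simulated readings are assumptions, and JSON size is used as a rough proxy for transmission cost.

```python
import json
from statistics import mean


def summarize_batch(sensor_id, readings):
    """Reduce a batch of raw readings to a compact summary record."""
    return {
        "sensor_id": sensor_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


# Simulated batch: 600 temperature readings from one hypothetical sensor.
raw = [20.0 + (i % 5) * 0.1 for i in range(600)]

# Size of the payload if every raw reading were sent to the cloud.
raw_bytes = len(json.dumps(raw).encode("utf-8"))

# Size of the payload if only the edge-computed summary is sent.
summary = summarize_batch("sensor-1", raw)
summary_bytes = len(json.dumps(summary).encode("utf-8"))

print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

The trade-off is that detail is lost: the cloud sees only the aggregates, so the edge device must compute everything that downstream analysis will need.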

Enhancing Responsiveness and Scalability

The responsiveness of centralized computing also suffers because its computing resources are rarely dedicated to localized data processing demands. Dedicating cloud resources solely to such demands is prohibitively expensive, since it undermines the principles that cloud computing is based upon. The cloud computing community recognizes this as well: major cloud operators support edge devices that cache commonly accessed data closer to consumers.

Optimal Resource Utilization and Improved Security

Edge computing provides dedicated data processing resources closer to the data sources, handling load traditionally reserved for centralized data centers and cloud computing. Its proximity to data sources improves responsiveness and scalability since it is more readily configurable to changing processing loads. Distributing edge computing across multiple locations improves reliability and availability, because a highly configurable network can better respond to failures. Edge computing also allows optimal resource utilization since its specialized hardware is designed specifically for its intended tasks. In contrast, cloud computing resources need to accommodate a wide range of tasks and are not optimal for any one task.

Enhanced Cybersecurity Measures

Edge computing introduces an additional defense perimeter for cybersecurity, because data sent for remote processing is more vulnerable when it travels long distances over often insecure networks. Edge computing reduces data exposure on insecure networks and can add security protections to any data that remote data centers need for further processing.

Rise of Real-time Analytics: Instant Insights for Actionable Decisions

From Batch Processing to Real-time Interaction

When digital computers were first introduced, they performed tasks in batches, not starting a task until the previous one was completed. This started to change with the introduction of timesharing, where computers switched between tasks rapidly, giving the impression of tasks running in parallel and providing near real-time responses to operator commands. Since then, many use cases have shifted from batch processing to real-time interaction, and the concept of timesharing has been refined into the multi-processing and multi-tasking supported by modern operating systems. However, many use cases remain better suited to batch processing.

Evolving Needs for Real-time Analytics

Big data processing has followed a similar trajectory. Initially, the overwhelming majority of use cases were implemented through batch processing. However, as technologies and architectures evolved, requirements for near-instantaneous analysis of incoming data have resulted in many use cases being implemented through real-time analytics. Several factors drove these requirements. The first is streaming data, which often becomes stale quickly. The second is the growing need to respond to events as they occur. The third is the need to minimize data latency so that decisions can be made in time. The fourth is the need to improve overall business agility with real-time feedback loops. Together, these developments are driving the shift towards real-time analytics.

Key Use Cases for Real-time Analytics

There are several key use cases where real-time data analytics is becoming increasingly essential due to rapidly changing conditions. Cybersecurity and fraud detection are two use cases whose dependence upon real-time analytics will only increase as technology is used for more sophisticated attacks on IT infrastructure and financial systems. The window to respond effectively is continually narrowing.
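
To make the idea of responding to events as they arrive more concrete, the sketch below checks each incoming transaction against a sliding time window and raises an alert when an account is unusually active. This is a simplified illustration, not a production fraud rule: the `VelocityMonitor` class, the account names, and the limits are assumptions, and timestamps are assumed to arrive in order.

```python
from collections import defaultdict, deque


class VelocityMonitor:
    """Flag accounts with too many events inside a sliding time window."""

    def __init__(self, max_events=3, window_seconds=60):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # account_id -> recent timestamps

    def observe(self, account_id, timestamp):
        """Record one event and return True if the account exceeds the limit."""
        window = self._events[account_id]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_events


monitor = VelocityMonitor(max_events=3, window_seconds=60)

# Hypothetical stream of (account, timestamp in seconds) events.
stream = [
    ("acct-1", 0), ("acct-2", 5), ("acct-1", 10),
    ("acct-1", 20), ("acct-1", 30), ("acct-1", 200),
]
alerts = [(acct, ts) for acct, ts in stream if monitor.observe(acct, ts)]
print(alerts)
```

Each event is evaluated the moment it is observed, which is the essential difference from a batch job that would only find the burst after the fact.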

Business Operations and Real-time Analytics

Business operations is another important use case category increasingly dependent upon real-time analytics. Optimizing processes and resource consumption is one such area, since real-time feedback is necessary for operational adjustments. Energy management systems are an important subcategory, given the increasing drive for energy efficiency. Another example is supply chain management, where smooth operation depends on tight coordination between suppliers, manufacturers, and distributors. Real-time visibility into inventory levels and logistics can reduce delays and inefficiencies in the supply chain.

Customer Management and Dynamic Pricing

Real-time analytics also benefits use cases around customer management and dynamic pricing. Faster responses to customer interactions improve customer experience and enable real-time feedback and analysis. Dynamic pricing adjusts prices in real time based on demand, supply, and market conditions.
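
A minimal sketch of a dynamic pricing rule is shown below. The formula, the `dynamic_price` function, and its parameters (`sensitivity`, `floor`, `ceiling`) are hypothetical choices for illustration; real pricing systems use far richer models and market signals.

```python
def dynamic_price(base_price, demand, supply,
                  sensitivity=0.5, floor=0.8, ceiling=1.5):
    """Scale a base price by the relative gap between demand and supply.

    The multiplier is clamped to [floor, ceiling] so that prices cannot
    swing without limit. All parameters here are illustrative.
    """
    if supply <= 0:
        # Nothing available: charge the maximum allowed multiplier.
        multiplier = ceiling
    else:
        multiplier = 1 + sensitivity * (demand - supply) / supply
    multiplier = max(floor, min(ceiling, multiplier))
    return round(base_price * multiplier, 2)


# Demand 20% above supply raises the price by 10% at sensitivity 0.5.
print(dynamic_price(100, demand=120, supply=100))
# Extreme demand is capped at the ceiling multiplier.
print(dynamic_price(100, demand=500, supply=100))
```

Clamping the multiplier is a deliberate design choice here: it keeps an automated rule from producing prices that would surprise or alienate customers.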

Decision Support Systems and Real-time Feedback

Real-time analytics benefits decision support systems by providing analytics of fresh information and providing real-time feedback on decision outcomes.

Reshaping Industries: Big Data’s Impact Across Sectors

The introduction of big data is changing numerous industries, including healthcare, retail, banking, manufacturing, transportation, agriculture, entertainment, and education. This section provides several examples of big data's impact on these sectors.

Healthcare: Enhancing Diagnostics and Personalized Care

Integrating AI with big data enhances diagnostic accuracy for healthcare professionals. Data analysis and data-driven insights allow healthcare professionals to better tailor individual treatments and optimize patient care plans based on genetic and medical history. Predictive models are used to anticipate adverse events (including disease outbreaks), allowing timely response and prevention and ultimately reducing healthcare costs.

Retail: Personalizing Customer Experiences

Data analytics for purchasing behavior allows retailers to create more detailed customer segments. Retailers can also analyze customer behavior to create profiles for individual customers, and this is used to build personalized marketing strategies for each customer and meet their unique needs. Big data also allows real-time data to be used as feedback for dynamic marketing strategies.

Banking: Predictive Analytics and Fraud Detection

Predictive analytics has become important for identifying and reducing market and financial risk. Thorough data analysis is now a crucial part of credit scoring. Finally, real-time monitoring is the foundation of fraud detection systems.

Manufacturing: Optimizing Processes and Maintenance

Data analysis of the manufacturing process improves product consistency and production process quality. Predictive maintenance models facilitate the reduction of maintenance expenses and downtime.

Transportation: Improving Efficiency and Reliability

Route optimization based on real-time traffic data is essential for making transportation more timely, reliable, and fuel-efficient. Big data analytics also helps predict vehicle maintenance needs, thereby preventing breakdowns and delays, and supports data-driven decision-making to enhance fleet management.

Agriculture: Enhancing Crop Yields and Resource Management

Agriculture is becoming a major beneficiary of big data deployment to improve crop yield. Big data allows farmers to use satellite and sensor data to monitor crop health and soil conditions. This data is further analyzed to apply more precise amounts of water, fertilizer, and pesticides.

Conclusion: A Survey of Big Data Trends

This article is a high-level survey of big data trends, intended to acquaint the reader with them.